We talk to Kevin Riggle (@kevinriggle) about complexity and safety. We also talk about the Twitter acquisition. While recording, we discovered a new failure mode where Kevin couldn’t hear Thomas, but David and Deirdre could, so there’s not much Thomas this episode. If you ever need to get Thomas to voluntarily stop talking, simply mute him to half the audience!

This rough transcript has not been edited and may have errors.

Deirdre: Hello. Welcome to Security Cryptography Whatever. I’m Deirdre.

David: I’m David.

Thomas: I’m the weird radio that everyone thinks is happening in the background, but there’s no radio here. I don’t understand where this is coming from.

Deirdre: Uh, and we’re here today with our special guest, Kevin Riggle. Hi Kevin.

Kevin: Hi there. Great to be here.

Deirdre: Thank you for joining us. This is very interesting to me, because this is one of my little pet topics: I wanna talk about the complexity of software, and the complexity of the things that we build with software, from a security perspective, because we care about building secure things with our software. But this is also in the context...

David: Some of us do.

Deirdre: Yeah, well, well,

David: Three of the four.

Deirdre: Three out of the four wanna build secure software systems. This also just happens to align with one of our favorite distributed complex systems, twitter.com, this free website, being torn to shreds, theoretically, by what one could call a malicious takeover. So it’s interesting to talk about how you build these things and how you keep them operating safely or securely, whatever safety or security means for them.

So Kevin, can you tell us a little bit about what you do in terms of building secure systems, but also safety-critical systems?

Kevin: Well, so the idea of safety is something that we all have a sort of intuitive feeling for. A roller coaster feels unsafe, but we know, at least intellectually, that roller coasters are pretty safe, and crossing the street feels fairly safe, but is, you know... Okay, so maybe we don’t have a great intuitive sense of this, but we have at least a rough one. You see a bear, and if it’s not at the zoo, if you encounter her out in the wild, it’s probably not that safe. But milk and cookies, probably safe, allergies aside. Anyway.

But the field of system safety actually defines it a little bit more specifically.

This is Professor Nancy Leveson at MIT, who I’ve learned a lot from over the years, and she defines safety as freedom from unacceptable loss. Now, as soon as I say that to people, they immediately ask, "Unacceptable to whom?"

Deirdre: Yeah.

Kevin: That’s where the politics comes in. So we’ve almost immediately encountered it.

And so I got into this about 10 years ago now. I was working for Akamai. I started as a software engineer on the InfoSec team, working for a guy named Michael Stone, and I almost immediately got distracted by the much more interesting security problems and did not want to write Michael’s wiki.

We’re still great friends; he’s still just a tiny bit salty, I think. But Akamai at that time had had a couple of really bad incidents, and Michael had what I’d describe as a crisis of confidence: is it possible for us to really build these systems safely at the scale that we’re operating at? Because Akamai was at that point about 150,000 servers in 110 countries. We were delivering about a quarter of the traffic on the web on any given day. I mean, you were at Akamai, in the same

Deirdre: Yeah, I was, yeah.

Kevin: So, a couple of really bad incidents where it took us a long time to figure out what was going on.

And Michael was asking: is this even possible? Or should we turn this whole internet thing off and go farm potatoes in Vermont? Would that be better for humanity? And John Allspaw had just published his blog post about "Just Culture" at Etsy, which introduced the idea of blameless postmortems.

And Michael went and pulled on that thread and discovered Professor Nancy Leveson’s book, "Engineering a Safer World", which was then in preprint, and was like, wait a minute, she’s at MIT, literally blocks from our office. She has a yearly workshop, the STAMP workshop, this safety engineering conference.

So he showed up to it and was like, this seems interesting. He read the book and was like, I wonder if we can use this in software.

Deirdre: Yeah.

Kevin: And I was very skeptical of this, but he convinced me to read the book, and when I read it I was like, oh wait, this explains a lot of things that I’ve observed but didn’t have language for.

Deirdre: Yeah.

Kevin: And shortly thereafter we were given governance of the incident management process at Akamai, which was a great thing to give somebody who was deep into this stuff and trying to figure out how to use it. So I helped him run the incident process there, and we applied some of the lessons from the book very productively.

Ultimately, I was really proud of how that worked out, and we started to figure out ways that we could use this in software, but... Deirdre, if you wanna jump in. I don’t want to,

Deirdre: Yeah, so specifically, there’s stuff you can learn or apply in a postmortem setting. Do you have any top-level "these are the things that I bring to a postmortem"? But then I also wanna ask about how we use these things constructively while we’re designing and building systems.

Kevin: Yeah. So those are in fact the two ways that we found it really useful. And there are in fact two whole techniques laid out in the book: one of which is basically how to do postmortems, and the other of which is how to do design analysis of stuff that you are building.

Both of these techniques are really fairly intensive, and we couldn’t convince anybody to let us take a couple of months to do a design analysis or an incident investigation; that was just gonna be a non-starter. So we had to sort of... Hmm.

David: What do you mean by design analysis, or even incident investigation, in this case? Because a large tech company that I will not name, that has a web browser, does design docs and does blameless postmortems, and there are still security bugs that are patched as rapidly as possible.

and so, great?

Kevin: Yeah. Well, that’s the thing. Neither of these are silver bullets, but it also matters how you do these design analyses and these postmortems, and blamelessness is just one piece of it. When I left Akamai, I was like, this stuff that we’re working on is super cool, but nobody else is working on it. I was talking about it on Twitter some, and people were interested, but there wasn’t a huge community. And then I came out to San Francisco, started at Stripe, and everyone was talking about blameless postmortems, and I was like, why are you talking about that?

It’s cool, and if you were to take only one thing from this whole body of literature and practice, that’s the thing to take. But where did you get it from? And that was when I worked out that they had also all read John Allspaw’s "Just Culture" blog post, the same way that Michael had. So that was a really pleasant thing to find.

So the core insight of this is that most really bad accidents don’t happen because of what I’ll call component failures. The question of where you draw the abstraction boundaries is a little weird, but they don’t happen because of things that are easy to characterize: a bolt shears, a belt snaps, there’s a sign error in one line of code. The really bad accidents happen because multiple subsystems, each operating correctly according to its designers’ intent, interact in a way that their designers didn’t foresee. That can be as simple as subsystem A being spec’d to output values in metric while subsystem B expects input in US units.

And if they talk to each other in a way you don’t expect, kaboom.
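This is the same class of error that was behind the loss of NASA’s Mars Climate Orbiter, where one piece of software produced impulse in pound-force seconds and another expected newton-seconds. As a minimal sketch (not how any real flight software is written), one defensive pattern is to carry units in the type, so a mismatch is rejected at the interface instead of being silently misinterpreted. The class and function names below are hypothetical, and the runtime `isinstance` check stands in for what a static checker such as mypy would also flag.

```python
from dataclasses import dataclass

# Hypothetical unit-tagged types for illustration only; not from any real flight software.
NEWTON_SECONDS_PER_LBF_SECOND = 4.448222  # 1 lbf*s is about 4.448222 N*s


@dataclass(frozen=True)
class NewtonSeconds:
    value: float


@dataclass(frozen=True)
class PoundForceSeconds:
    value: float

    def to_newton_seconds(self) -> NewtonSeconds:
        # The conversion lives in one explicit, reviewable place.
        return NewtonSeconds(self.value * NEWTON_SECONDS_PER_LBF_SECOND)


def subsystem_a_report() -> PoundForceSeconds:
    """Hypothetical subsystem A: reports impulse in US customary units."""
    return PoundForceSeconds(10.0)


def subsystem_b_ingest(impulse: NewtonSeconds) -> float:
    """Hypothetical subsystem B: accepts SI impulse only and rejects anything else."""
    if not isinstance(impulse, NewtonSeconds):
        raise TypeError(f"expected NewtonSeconds, got {type(impulse).__name__}")
    return impulse.value


reading = subsystem_a_report()
try:
    subsystem_b_ingest(reading)  # unit mismatch: rejected at the interface
except TypeError as err:
    print("rejected:", err)

print(subsystem_b_ingest(reading.to_newton_seconds()))  # explicit conversion is accepted
```

Putting the conversion in one named method also gives reviewers a single place to check the constant, which is exactly the kind of boundary where these interactions tend to hide.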

Deirdre: Mm-hmm. Which one of the Mars probes was it that smashed into the Mars surface at like 300 miles an hour because one component was in metric and one component was in Imperial units, or

Kevin: I always forget if it was the Mars Polar Lander or the Mars Climate Orbiter.

Deirdre: Yeah.

Kevin: It was the Mars Polar... no. One of them had an issue with some sensor. One of them had this units issue, and they both happened around the same time. But yeah, it’s exactly a real example, and it’s one of the ones that Nancy uses in her book.

And it’s also a great example because NASA actually did the postmortem on it, and they were like: on the one hand, Lockheed screwed up. The whole system is spec’d in metric. We don’t know why they decided to build this in US units, but they did. On the other hand, we should have noticed that,

Deirdre: Yeah.

Kevin: We should have noticed. They passed all of our conformance testing. So it’s really on us.

David: How close is NASA to, let’s say, the theoretical principles of the book you were talking about earlier? If any organization was doing it correctly in terms of safety, you would expect NASA to do it, and yet they still missed this. So are they doing that?

Am I wrong that they would be more risk-averse and more safety-oriented than others? Or are they just doing it in a different way, or something else?

Kevin: It varies. Deirdre just pulled out first "The Challenger Launch Decision" and then "Normal Accidents", which has the Challenger picture on the cover. And Nancy Leveson was actually part of the Columbia accident investigation board, so she applied some of her techniques there.

It varies. I think NASA does a pretty good job, and some of it is just the political whims of the day: how good a job they do depends on who’s in charge of NASA at that point in time. Commercial aviation is the other place that does a really good job of this. The international commercial aviation system is probably the safest large-scale system that humanity has ever built, and

Deirdre: That makes sense, because you’re doing this very unhuman thing: get in this tin can, you’re gonna go 30,000 feet in the air, and we swear we’re gonna get you there safely, you and your children, and we won’t fall out of the sky and land on your house.

Kevin: It’s enormous. Yeah. And it has to work correctly. You’ve got these enormous mechanical systems, airplanes, and then you’ve got the infrastructure on the ground supporting them. And then you’ve got the social infrastructure of the airlines. And then you’ve got the bureaucratic infrastructure of all of the national regulators and investigators, like the FAA and the NTSB. And then you’ve got ICAO and IATA at the international level. There is an airport in every country in the world, and they all need to interoperate. It makes the internet look kind of small, actually.

Deirdre: Yeah.

Kevin: We’ll get to why the internet is differently challenging in a minute, but yeah, you look at the scale of that.

The other classic example program is the US Navy SUBSAFE program. That is in many ways a much simpler system. You do still have things operating at many of those scales, but it’s really just the US, and the SUBSAFE program’s whole thing is: we just need the subs to be able to get back to the surface.

And so that’s where they ask this question, basically: what invariants need to be true about this system in order for it to be safe? And they’re like, the sub always needs to be able to get back to the surface. The engineering needed to accomplish that is still a lot of work, but they’ve really got one core problem to solve, and that’s how they solve it.

Whereas aviation has a bunch of core problems to solve. And Nancy’s lab actually did the spec for TCAS II, the Traffic Collision Avoidance System, version two, which is the system that keeps planes from running into each other in midair. She says she’s never doing it again; the experience of doing it was just too much.

The story she tells is basically this: okay, so we don’t want planes to run into each other in midair. Well, what if we only let one plane take off at a time? That’ll solve the problem, right? Let’s go. Great, lunch. We’re done. Wait. Oh no. We need multiple planes to take off at a time?

Okay, well maybe we only let one plane take off per… country, right? We’re done, right? No. And so what they eventually worked it down to is that the planes need to be separated by either a quarter mile of lateral distance or a thousand feet of vertical elevation. And so they just put radios on all the planes, which scream their lateral position and their altitude at each other, and there are alarms in the cockpit which fire in increasing levels of severity as those envelopes get...

Because they’re like: given the speeds that we’re traveling at and the physics of the system that we’re working with, as long as that invariant is maintained, this will be safe, at least on this dimension.
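The separation rule above is an invariant over pairs of aircraft, and it can be sketched in a few lines. This is a toy that assumes a flat local coordinate grid in feet and uses the figures quoted in the conversation (a quarter mile laterally or 1,000 feet vertically). The escalation thresholds and alert names are hypothetical, and real TCAS II logic, which reasons about time to closest approach, is considerably more involved.

```python
import math
from dataclasses import dataclass

# Figures as quoted in the conversation; real TCAS II logic is far more involved.
LATERAL_MIN_FT = 5280.0 / 4   # a quarter of a statute mile
VERTICAL_MIN_FT = 1000.0


@dataclass(frozen=True)
class Aircraft:
    x_ft: float    # position on a flat local grid (a simplifying assumption)
    y_ft: float
    alt_ft: float


def separation_margin(a: Aircraft, b: Aircraft) -> float:
    """How many 'minima' apart the pair is on its better axis; >= 1.0 means separated."""
    lateral = math.hypot(a.x_ft - b.x_ft, a.y_ft - b.y_ft)
    vertical = abs(a.alt_ft - b.alt_ft)
    return max(lateral / LATERAL_MIN_FT, vertical / VERTICAL_MIN_FT)


def invariant_holds(a: Aircraft, b: Aircraft) -> bool:
    # Safe if separated laterally OR vertically.
    return separation_margin(a, b) >= 1.0


def alert_level(a: Aircraft, b: Aircraft) -> str:
    """Escalating alerts as the envelope closes (hypothetical thresholds and names)."""
    margin = separation_margin(a, b)
    if margin >= 2.0:
        return "clear"
    if margin >= 1.0:
        return "caution"
    return "warning"


own = Aircraft(0.0, 0.0, 30_000.0)
print(alert_level(own, Aircraft(10_000.0, 0.0, 30_000.0)))  # clear
print(alert_level(own, Aircraft(0.0, 0.0, 31_000.0)))       # caution
print(alert_level(own, Aircraft(500.0, 0.0, 30_500.0)))     # warning
```

Taking the maximum of the two normalized distances is what encodes the "either/or": a pair directly above one another is fine as long as the vertical gap alone meets the minimum.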

Deirdre: That’s beautiful, because it’s a very simple invariant for a very complex system, with all these independent actors that may change their behavior over time. But the one thing is: you have to be at least this far away from each other horizontally.

You have to be at least this far away from each other vertically, and then you’re

Kevin: done.

Yeah, exactly. Yeah, yeah.

David: In order to never use-after-free, I will simply only malloc, never free.

Kevin: Right. Well, yes, yes. The invariant that memory... I forget exactly how you’d phrase it, but the invariants that our memory-safe languages enforce around the use and freeing of memory are these kinds of things.
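David’s joke is one way to keep the invariant that no live reference points at freed memory; another is to make stale references detectable. The toy below, in the spirit of the generational-index arenas found in some Rust and game-engine code, reuses freed slots but bumps a per-slot generation counter, so any handle created before the free is rejected. The names are hypothetical, and Python is already memory safe; the point is only the shape of the invariant.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass(frozen=True)
class Handle:
    index: int
    generation: int


class Arena:
    """Toy generational arena: slots are reused, but stale handles are detected."""

    def __init__(self) -> None:
        self._values: List[Optional[Any]] = []
        self._generations: List[int] = []
        self._free_slots: List[int] = []

    def alloc(self, value: Any) -> Handle:
        if self._free_slots:
            index = self._free_slots.pop()
            self._values[index] = value
        else:
            index = len(self._values)
            self._values.append(value)
            self._generations.append(0)
        return Handle(index, self._generations[index])

    def free(self, handle: Handle) -> None:
        self._check(handle)
        self._values[handle.index] = None
        self._generations[handle.index] += 1  # invalidates every outstanding handle
        self._free_slots.append(handle.index)

    def get(self, handle: Handle) -> Any:
        self._check(handle)
        return self._values[handle.index]

    def _check(self, handle: Handle) -> None:
        # The invariant: a handle is usable only if its generation is current.
        if (handle.index >= len(self._generations)
                or self._generations[handle.index] != handle.generation):
            raise RuntimeError("use after free: stale handle")


arena = Arena()
first = arena.alloc("first")
arena.free(first)
second = arena.alloc("second")   # reuses slot 0 with a new generation
print(arena.get(second))         # second
try:
    arena.get(first)             # stale handle from before the free
except RuntimeError as err:
    print(err)                   # use after free: stale handle
```

The same check also catches a double free, since the second `free` presents an out-of-date generation.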

Thomas: So I have a question for you. The book we’re talking about, is it "Just Culture"?

Kevin: Uh, "Engineering a Safer World".

Thomas: Okay. My question is, I feel like the SUBSAFE program is interesting because you don’t generally think about a unified, single, simplified goal of safety. That’s a cool thing, right?

And TCAS is kind of interesting too. But if you were to come up with the textbook comparison between software engineering and other disciplines, everybody would say aviation versus software, right? So on its face, we know that aviation and aerospace do things differently from software engineering.

Right. But what’s the thing that software engineering can effectively learn from aerospace? What’s the doable thing?

Kevin: Well, one of the doable things is... we found at Akamai that what was important was not so much picking up the particular techniques. STPA is the name of Nancy’s design review technique, and CAST is the name of the accident investigation technique, but they’re both designed around aviation, where the Dreamliner, the Boeing 787, spent a decade in development.

So it was fine if they took six months or a year to do a safety analysis of that design, like

David: I mean, it didn’t really seem to be fine, because that plane didn’t work for several years after it was

Kevin: released

But there weren’t any

David: Like does it, is it even in service now? Like are there

Kevin: Oh, absolutely.

David: now that are like not

Kevin: There weren’t any deaths. Uh, the 737 MAX debacle is actually the one where they fucked this up, and I’m still mad at them about this, and they’re

Deirdre: The Dreamliner seems to be the Boeing Company’s last great plane. And everything after that has been like, oh no. What did they do to Boeing?

Kevin: Yeah. Well, my understanding is that McDonnell Douglas bought... well, they bought McDonnell Douglas, and the McDonnell Douglas execs wound up running the show, which is the way it works at these

Deirdre: It got consumed. There’s a great book about this, and I’ll link it in the show notes. Yes,

Kevin: Interesting. Okay. There’s also, I think, a documentary on Netflix about the 737 MAX that I haven’t watched yet, but I need to.

Yeah. Fun fact: the CEO of Boeing at the time of the debacle went to the same high school as I did. It’s a town of 6,000 people. My graduating class was 60 people. It’s in the middle of cornfields in rural Iowa. A lot of interesting people, but nobody anybody has ever heard of comes from there, to first order.

We have a football player, and Dennis Muilenburg, the CEO of Boeing when they royally fucked up, and...

Deirdre: Oh no.

Kevin: Me? And that’s it. So yeah, as soon as I heard that, I was like, oh, they’re fuuucked.

Thomas: So we

Kevin: And the new CEO who replaced Muilenburg is still like blaming the pilots for this. I’m like, dude, you shipped them a plane.

Deirdre: right?

Kevin: And you trained them on it. And if that plane and that training were insufficient, that’s on you. You cannot be like, oh, the pilots needed more training; in the US they would’ve had more training. You sold them that thing.

Deirdre: Yeah. So for software, we can do a lot of things faster and easier. We can just ship things more quickly than a fuselage with wires in it and people in it that has to have a radi... We can do all these things much faster and easier, and usually with fewer people, to get something working than with a plane or anything else.

So how do we do safety culture, kind of the way we would do it for aviation, but when I can type some type-ity types into my computer and then I’m done? Right?

Kevin: Well, so what you talk about there, our ability to iterate and our ability to ship stuff quickly, is a challenge, because you can get yourself into trouble real fast. It’s also a blessing, because it’s much easier to fix things. Think about how challenging it was for Boeing to replace the batteries in the 787.

That was a nightmare. Whereas we can ship software updates much more easily. And the way that we’ve moved to CI/CD for test and deployment and continuous delivery, all the blue/green stuff, is brilliant. That’s a thing the aviation people are kind of mad that they can’t do.

And they are sometimes looking for ways to do more of that. In fact, that’s one of the things that I think Tesla actually gets right: they are just constantly shipping updates to the cars. I don’t think a whole lot of their safety engineering otherwise, but that’s a whole ’nother podcast.

But the stuff they do on the software side is actually really smart.

Deirdre: So we’re kind of drawing from this nice post that you wrote a couple of years ago, which is basically the top five reasons why software safety is harder. Software safety dovetails with software security, but it’s not the same thing as the software security we’ve been talking about on this podcast a lot, and that a lot of other people talk about. And we touched on one of those things: you can do stuff quicker in software, which is both a plus and a minus.

You can make something that’s broken faster, with fewer people, and get it to customers, and you can fix it faster and easier, with fewer people working on it. So one, you need fewer people, and two, it moves faster. But the one that leads me to wanting to talk to you today is the "software is more complex" part, which you mentioned earlier. These big failures, like the Mars probe crashing into the planet, happen because one subsystem did not interact well with another subsystem.

Even though those independent subsystems, those individual components, passed all their specs and all their compliance, and all their unit tests worked. And then the integration test never happened? Or

Kevin: They passed the integration test too. Yeah. It’s just that once that got out into the real world, the real world integration test…

Deirdre: Yeah, the thing that might be harder to run before you deploy. And this is sort of the interaction failure.

There was another post, and I can’t remember who came first. It was either Nelson Elhage, who we also know, or Chris Palmer, who was at Google on Chrome Security. I think they both wrote this post. It’s basically: security is found where the abstraction boundaries overlap, where the assumptions... Because abstraction boundaries are fundamentally human constructions, where you wrap a bunch of complexity about what the thing is doing under a sort of "don’t you worry about that, you just worry about this part." And then the security or the safety comes in where, oh no, you actually have to worry about those complexities that you abstracted away, because you didn’t quite do it right.

Kevin: Because all abstractions leak, necessarily. Yes. An abstraction is always a simplification of the world. We say that all models are wrong, but some are useful. All abstractions are wrong, but some are useful. And the value they provide comes from the fact that they obscure some of what’s going on underneath.

But that also means that sometimes what’s going on underneath matters, and what’s going on underneath is also a source of those unplanned interactions. If you don’t understand that you’re using a library which defaults to US units when you build the thing, it might never occur to you that you’re shipping something with the wrong units, because, I don’t know, they gave us three libraries to choose from, we picked that one, and it seemed fine.

So, yeah, the way that, on the internet in particular, everything is effectively next to everything else is an incredibly potent source of these unplanned or unsafe interactions, because the physics of the internet is just so different from the world that we’re used to. We have to go to incredible lengths to make the physics of the internet work a little bit like the physics of the real world that we’re used to. If we want it to be really hard, but still possible, to get from point A to point B, we have to put up firewalls and VPNs and all this kind of

Deirdre: And this is the power of the internet and the power of software, and it’s a double-edged sword. It’s incredibly fast. It takes things that are very far away from each other and lets them interact as if they were right on top of each other in the same computer, but then

Kevin: But then they can interact with each other like they’re right on top of each other in the same computer. And if you don’t want that, or if that interaction is bad, now you have a bad interaction happening right here. Yeah, this is a thing that I say about how our password advice has changed.

Where like back in the day, you know, in the nineties, what we were worried about and the reason we told people not to write down their passwords, is that the threat model was that somebody was gonna stay late after work, walk down the hall to the CFO’s desk, turn over his keyboard, find his password, and log into the company financial system and like wire themselves a bunch of money.

These days, we are much more worried about some Russian hackers knocking over a Minecraft forum, taking the unencrypted password dump from there, and running it against the company’s financial system from Russia. And so writing your password down is great. Probably still don’t stick it under your keyboard, but maybe stick it in your wallet.

Like, that’s fine, especially if writing it down means that you can use a much longer password and you know, one that you don’t use elsewhere. So that’s a place where the network, the adjacency, the weird physics of the internet has had a big impact on safety.

Deirdre: The other interesting part that you wrote about, besides these abstraction issues, the fact that software makes things both easier and faster, and the way localities just kind of collapse, is feedback loops. If you’re just writing a straight-up program, you might not have many, quote, feedback loops, and they might be very tightly controlled; they don’t even matter.

But when you have a more complex software system, such as a browser, or a server of a certain complexity, or a distributed system like a massive microservice-based, push-publishing, relaying sort of publishing platform, feedback loops become so important to whether your thing actually crawls to a halt or works at all.

It’s definitely one of those things where you level up from software engineering to systems design.

Kevin: Yes. Yes, exactly. On the security side, those places where an adversary can do a little bit of work and get big amplification, because there’s some sort of positive feedback loop that defenders don’t want, are a big deal. Think about going viral as, again, both a positive and a negative example. And then at the systems level, I think we’ve all had the moment where some queue blew up because a process didn’t get the pushback it needed, like, no, slow down, stop sending this to me. And so it just kept going, and that blew up something downstream, which blew up something further downstream.
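The missing pushback described above is what a bounded queue provides. This minimal sketch uses Python’s standard `queue` module: with `maxsize` set, `put_nowait` raises `queue.Full`, which the hypothetical `offer` helper turns into a "slow down" signal, while an unbounded queue accepts everything and never pushes back. The bound of 3 is arbitrary.

```python
import queue


def offer(q: "queue.Queue[int]", item: int) -> bool:
    """Try to enqueue without blocking; False is the 'slow down' signal to the producer."""
    try:
        q.put_nowait(item)
        return True
    except queue.Full:
        return False


# Bounded: the queue pushes back once it holds 3 items.
bounded: "queue.Queue[int]" = queue.Queue(maxsize=3)
print([offer(bounded, i) for i in range(5)])            # [True, True, True, False, False]

# Unbounded (maxsize=0): every offer succeeds, so nothing ever tells the producer to stop.
unbounded: "queue.Queue[int]" = queue.Queue()
print(all(offer(unbounded, i) for i in range(10_000)))  # True

# Once the consumer drains an item, the producer may proceed again.
bounded.get_nowait()
print(offer(bounded, 99))                               # True
```

What the producer does with a `False` (retry later, shed load, or propagate the signal upstream) is a design decision; the bound only guarantees the signal exists.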

Carefully managing those feedback loops is the core of it, and the reason that we want these invariants over these systems is basically to express the bounds of our feedback loops. Think about a nuclear reactor as the classic example of a feedback loop that needs to be very tightly controlled within certain bounds, or you know,

Deirdre: You want those control rods to be in, but not too far in. And you want the water to flow, but you don’t want it to flow too much, and you want the power to turn the turbines, and,

Kevin: but not too much. Yes.

Deirdre: but not too much. And sometimes you’re powering the turbine with the power from the reactor.

Kevin: now we have a, now we have second order feedback loops.

Deirdre: yeah,

Kevin: Or third order feedback loops. And Yes.

Deirdre: So, alright.

Kevin: And somewhere at the top level, there are the social feedback loops and the political feedback loops and, yeah.
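One way to read "invariants express the bounds of our feedback loops" in code is a controller whose output is clamped to a stated range no matter what the measurements say. The sketch below is a toy proportional controller for a request rate; the function name, target latency, gain, and rate bounds are all hypothetical, chosen only to make the invariant visible.

```python
def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def next_rate(rate: float, latency_ms: float, target_ms: float = 100.0,
              gain: float = 0.5, min_rate: float = 1.0, max_rate: float = 1000.0) -> float:
    """One step of a toy proportional controller for a request rate (hypothetical parameters).

    Latency above target shrinks the rate, latency below target grows it, and the
    clamp enforces the invariant min_rate <= rate <= max_rate regardless of input.
    """
    error = (target_ms - latency_ms) / target_ms
    proposed = rate * (1.0 + gain * error)
    bounded = clamp(proposed, min_rate, max_rate)
    assert min_rate <= bounded <= max_rate  # the invariant, stated explicitly
    return bounded


rate = 100.0
for latency in (50.0, 100.0, 300.0):
    rate = next_rate(rate, latency)
    print(rate)  # 125.0, then 125.0, then 1.0
```

A single bad measurement (here, latency three times the target) can push the loop hard, but only as far as the floor of 1.0, never to zero or below.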

Deirdre: Yeah. So I guess this sounds hard? And, and complicated? So how, what do we do?

Kevin: do we

David: Well, before we get into that: I don’t really think this is any different than any other field. We’ve certainly enumerated a lot of causes of complexity and how that relates to security and software. But there are weird, dumb complexities in every other field, right?

Your soil freezing slightly differently can destroy your ability to resist an earthquake. Or weird edge cases in oil rigs can cause explosions. And it’s not like all of these have elegant fixes. Maybe you’re back at the drawing board for years, or maybe you build a building in SoMa and then it turns out it’s sinking into the swamp.

Or maybe your solution to the fact that your pump doesn’t fit in the room on the oil rig is to cut a hole in the ceiling, right? There’s all of this kind of stuff happening in every other field. It’s not clear to me that software’s any different.

Kevin: I think software is a little bit more so on certain of these dimensions that we’ve talked about, that I talk about in the blog post. There is,

David: for sure. It has its own idiosyncrasies,

Kevin: Well, there’s how fast it moves. There’s how much power is in an individual’s or a small team’s hands. There are these weird physics of the internet that we’re talking about. And if there’s maybe one thing which makes software a sort of phase change, especially software on the internet, where we’re operating at a level of complexity that more traditional fields aren’t, it’s that weird physics of the internet. Although, of course, those fields are also becoming increasingly networked, and so they’re starting to have many of the same challenges that we do. And then, in the security context, the consequences for adversaries are a lot lower when they’re sending packets from Russia than when they’re on the same plane as everybody else.

And so those are the places where there’s.

David: I totally agree with you that there are specific differences about software, but it doesn’t seem to me that there’s any fundamental difference from any other field. There’s just the shape of the problem. There’s the software shape of the problem, there’s the chemical engineering shape of the problem, there’s the building shape of the problem.

And so like, like what do we do with

Kevin: Well, yeah, and you’re exactly right. And that means that the same techniques these other fields have been working on for decades can apply in software as well. Or we can take the underlying philosophy, some of the underlying understanding that the world works differently than we thought it did, and apply it in software.

And so that was where we found we couldn’t apply CAST or STPA directly, because they were built for a Boeing world. But we could take the idea of, oh, we should start looking for these unsafe interactions; we should stop doing root cause analysis, because there is no one root cause.

There are root causes, and even there we have to accept that we’re picking a stopping point. And human error is not a root cause. Human error doesn’t really exist. We have the best humans available. Now what? We have to build our systems around the foibles and failings that we know we have, which are actually fairly well characterized at this point.

And so

Deirdre: Yeah. We, we have to price in that humans gonna human and then try to design resilient systems, uh,

Kevin: Not just resilient, but systems that are safe, absolutely provably safe in the presence of those

Deirdre: Yeah, not, not just that they’ll continue to operate, but like what does operate mean in a safety or security sort of

Kevin: Right. And in fact we find, so there’s a great study out of the UK, actually, and it has the awful title of something like "From Safety One to Safety Two." Hollnagel is the name, H-O-L-L-N-A-G-E-L. And another one of the insights, and this is gonna be very relevant to a certain billionaire who, uh, bought himself a social network without understanding it, is that, so, they did this study of nurses, right?

Uh, and the first study they did was trying to figure out why things went wrong. They analyzed a bunch of accidents and they said, okay, well, we found all these places where nurses didn’t follow the procedures or checklists, and so clearly that’s the problem. Uh, but then some smart cookie got the idea to do a follow-up study, and they studied a bunch of near-misses, and what they expected to find was that in those cases the nurses had followed the checklist and the checklist had caught it, or they had followed the procedures and the procedure had caught it.

What they found was no.

Deirdre: just by happenstance it was just like,

Kevin: Well, not by happenstance. Uh, the nurses in the failure cases had a mental model of what was going on that was different than what was actually going on. And so they were doing the correct thing according to their mental model of the situation, which wasn’t the situation on the ground.

And in the situations where there were near misses, the nurses had a better mental model of the situation on the ground than the checklist or the procedure did, and were able to break the rules in order to ensure the safety of the patient.

Deirdre: Oh, that’s wild. Okay. Like

Kevin: Yeah.

Deirdre: what do, okay, what do we do with that? Like, what does that mean? Like if our check, okay, so like we’re making the checklists, the checklist is wrong, like, so.

Kevin: This is why blameless postmortems are important, uh, because they encourage us to ask, you know, what made this make sense to the people at the pointy end at the time? This is why cultures which explicitly acknowledge that the checklist is a tool, and in which people are empowered to deviate from it if that’s what needs to be done to keep people safe, are important. This is where a lot of the "Just Culture" stuff comes in.

And so this is also where a lot of the user experience stuff comes in. In order to make it possible for people to adapt in these situations, we need to give them the information they need and the training they need. We need to give them the training to develop a mental model of what’s going on, and we need to give them the information in the moment that allows their mental model to be in the same state as the situation on the ground.

This is why, if you read airplane accident reports, then if you’re ever in a plane that has any kind of trouble whatsoever, you really hope that your pilot is also a glider pilot on the side. And I think that’s because they just know how unpowered airframes work. They have that experience of unpowered airframes and they have that mental model

Deirdre: And then you can just try as much as possible to just coast and glide until you either troubleshoot the problem or find a water

Kevin: exactly. Yeah, yeah, yeah.

Deirdre: This kind of dovetails with like, you know, blameless postmortems are able to, to use things like checklists and sort of like the models that you have as tools.

Like, does any of this sort of approach kind of help us better understand what we’re building as we’re building it? And what I mean is, like, what it’s actually gonna do when we actually run it. Because for some of these complex systems, these distributed systems with feedback loops and just a lot of complexity, it’s really hard for humans, let alone a single human, to kind of fit it all in their brain and reason about it all and then be like, oh yes, it will behave like so, and I, like, capital-U understand how it works and what it’s doing. It’s like,

that seems really hard to me, like is there anything that we could take with this to like help us get closer to like the truth of like what it is that we’re building before we just say, here you go,

Kevin: Yeah. Well, I think that some of what this teaches us is a little bit of humility that like,

not just in the limit, but, like, in the 80% case, it’s impossible to fully understand what it is that we’re building. And so we have to take a more, like, almost scientific approach to it. And one of the things that we do really well in software, which we were talking about earlier, is iteration. You know, this idea of minimum viable products, this idea of agile, actually all comes out of many of the same places and is great for that, because we just make a little thing, put it out in the world, and see how it responds to the world.

We see how the world responds to it, and then we’re able to make, uh, decisions based off actual feedback rather than our sort of like expected or hoped for feedback. And it also teaches us, like when we encounter a very complex system that has evolved over the course of years and years to like not go in and immediately start like, you know, just like, pressing all the buttons and, you know, turning all the knobs and levers and like, you know, firing people and you know, like removing components and like whatever, like, because the current, you know, state of the system has a lot of, you know, the organization or organism’s entire history encoded in it, it, you know,

Deirdre: Yeah.

Yeah. Okay. So before we pivot directly to like complaining with a, a software safety person about what is happening to the institutional knowledge embedded into this distributed system, does anyone else have questions before I just go into it?

David: Yeah, I mean, I guess, like, actionably, what does any of this actually mean, though? Right. Cause, like I said, just about everybody already does design docs in some form. Everybody already does blameless postmortems. Maybe not everybody, but plenty of well-known software you use has that culture, and it’s still buggy or it still has security problems, and that happens anyway. And, like, actionably,

what does any of this do for me as someone that’s designing a system, or is a product manager, or is an engineering manager, beyond just, you know, iteration over time and lessons learned? Short of saying, you know, the field will look very different in 30 years, similar to how the field looked very different 30 years ago.

Kevin: That’s a very good question.

David: What, what do I do?

Kevin: And that’s one of the things that I’m sort of working to try to change. Both the work in my consulting group and something that I’ve been on about in my personal life is just trying to bring this to people, as anyone who has followed me on Twitter, where I talk about this constantly, knows.

But so we can do better design docs, I think, is the big idea, and I mean better in a very specific way: trying to identify these feedback loops ahead of time and then understand what invariants need to be true about the whole system in order for it to be safe. Um, we developed a threat modeling framework, uh, at Akamai that I wrote up for Stripe’s Increment magazine a couple years ago, which is very much inspired by this, and it’s incredibly lightweight.

We got to it because we were reading, like, two 40-page design docs a week, and we had an hour per doc in which we needed to sit down and come up with something actionable that we could take to the architecture board, where the graybeards at the company reviewed the things that we were building and trying to launch and tried to anticipate all the potential problems.

The security team needed to have something useful to say there, and we couldn’t go through the whole Nancy Leveson-style design review process, cause that takes six months. So we had a very lightweight thing where we just sat down and drew the system diagram, identified what the systems involved were, the principals,

uh, what potential bad things could happen either by somebody malicious doing a thing or, you know, Murphy of Murphy’s Law, um, who’s also an adversary, and then what invariants needed to be true or what, what invariants we could encourage the company to try to put into place to make the system safe. And we found that very powerful.

And I’ve been doing that now with a bunch of different clients and finding it very powerful. And then on the incident review side, blamelessness is the start, but then it’s sitting down and trying to identify, okay, what things intersected here that we didn’t understand?

What can we learn about the feedback of the system? How can we improve our mental model of the system, and how can we change the system as a result? You know, the Learning from Incidents people are all over this now. That’s the sort of community John Allspaw founded.
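Kevin’s lightweight review (draw the diagram, list the systems and principals, enumerate what could go wrong either maliciously or by Murphy’s Law, then write down the invariants that prevent it) can be sketched as a simple checklist structure. This is a minimal Python illustration under stated assumptions: the `ThreatModel` class, its fields, and the idea of mapping each invariant to the bad things it rules out are hypothetical, not the format of the Akamai framework or the Increment write-up. It only shows the one check such a review makes cheap: which identified bad things have no invariant yet.

```python
from dataclasses import dataclass, field


@dataclass
class ThreatModel:
    """Hypothetical record of one lightweight design review."""

    systems: list[str]
    principals: list[str]
    # Each bad thing is tagged with where it comes from: "malicious" or "murphy".
    bad_things: dict[str, str]
    # Each invariant maps to the bad things it is meant to rule out.
    invariants: dict[str, list[str]] = field(default_factory=dict)

    def uncovered(self) -> list[str]:
        """Return the bad things that no invariant addresses yet."""
        covered = {bad for targets in self.invariants.values() for bad in targets}
        return [bad for bad in self.bad_things if bad not in covered]


# Illustrative review of a made-up CDN feature.
review = ThreatModel(
    systems=["edge proxy", "config service", "log pipeline"],
    principals=["customer", "operator", "anonymous client"],
    bad_things={
        "operator pushes a bad config everywhere at once": "murphy",
        "anonymous client reads another customer's logs": "malicious",
    },
    invariants={
        "config changes roll out in stages with automatic rollback": [
            "operator pushes a bad config everywhere at once"
        ],
    },
)
missing = review.uncovered()
```

Here `missing` holds the one bad thing with no invariant yet, which is the kind of gap the review would send back to the design doc as an actionable item.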

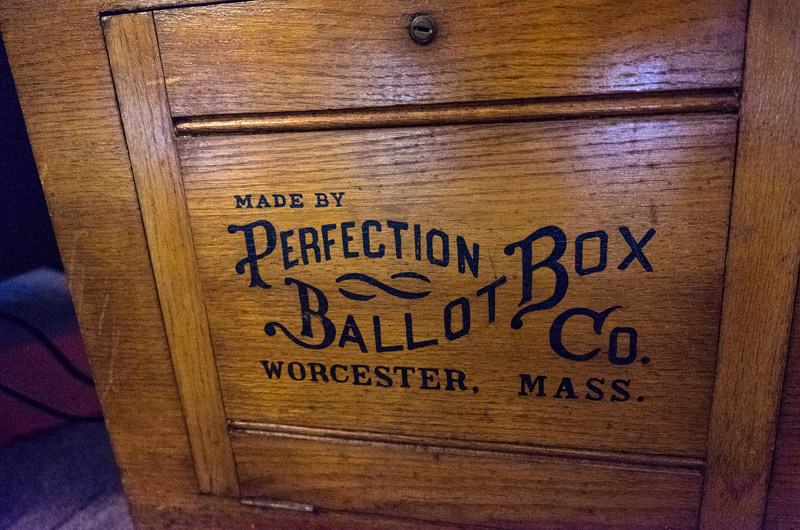

Deirdre: This reminds me of how in voting we, I’m not gonna talk about electronic voting. How it used to be that we would only do a recount or some sort of audit if something happened, if something bad

Kevin: I it.

Deirdre: And now a lot of the people who run elections are doing risk-limiting audits just as a matter of course. You look at a statistically relevant number of ballots, you don’t look at all the ballots, to make sure that they seem to be in accord with what you expect the distribution to be, whatever that looks

Kevin: Right.

Deirdre: Interesting. Ron Rivest, the famous RSA cryptographer, has been doing a lot of stuff with ballots and audits in his later career, which is kind of cool to see.
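The audits Deirdre describes can be made concrete with a small sketch. This is a simplified, two-candidate ballot-polling audit in the style of the BRAVO method: each sampled ballot updates a likelihood ratio comparing the reported winner’s share against an exact tie, and the audit stops as soon as that ratio reaches 1/α (here `risk_limit`). It assumes sampling with replacement, no invalid or blank ballots, and a known reported share; the function name, parameters, and the example contest are illustrative, and real election audits handle many more cases.

```python
import random


def ballot_polling_audit(ballots, reported_winner_share, risk_limit, max_draws, rng):
    """Simplified two-candidate, BRAVO-style ballot-polling audit.

    ballots: list of "W" (vote for the reported winner) or "L" (reported loser).
    Returns ("confirmed", draws) once the test statistic reaches 1 / risk_limit,
    or ("full_recount", max_draws) if the draw budget runs out first.
    """
    if not 0.5 < reported_winner_share < 1:
        raise ValueError("reported winner share must be strictly between 0.5 and 1")
    if not 0 < risk_limit < 1:
        raise ValueError("risk limit must be strictly between 0 and 1")
    threshold = 1 / risk_limit
    t = 1.0  # likelihood ratio: reported share vs. an exact tie (share = 0.5)
    for draw in range(1, max_draws + 1):
        ballot = rng.choice(ballots)  # sample one ballot, with replacement
        if ballot == "W":
            t *= reported_winner_share / 0.5
        else:
            t *= (1 - reported_winner_share) / 0.5
        if t >= threshold:
            return "confirmed", draw
    return "full_recount", max_draws


# Hypothetical contest: 10,000 paper ballots, reported 60/40, 5% risk limit.
ballots = ["W"] * 6000 + ["L"] * 4000
outcome, draws = ballot_polling_audit(ballots, 0.6, 0.05, 5000, random.Random(2022))
```

The stopping rule is the point of the design: if the reported winner did not actually win, the chance that the ratio ever reaches 1/`risk_limit` is at most `risk_limit`, so a wrong outcome gets confirmed with probability at most the risk limit. Otherwise the audit runs out of draws and escalates to a full hand count.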

David: That’s uh, uh, kind of an odd example to me though, right? Like Kevin’s kind of making this argument for like a generic way of kind of thinking about this stuff. But a risk limiting audit is an example of taking domain knowledge and iteration and solving a specific problem in a specific field. Like risk limiting audits don’t exist in statistics outside of elections.

They’re a specific type of statistics for elections. Right. And so what’s the, I guess what I’m kind of not seeing is the value in tackling some of these things in this generic way rather than, like, iteration and domain expertise.

Deirdre: Oh, I do want iteration and domain expertise. Well, what I was trying to pull out with risk-limiting audits is that now we do them just because, not because something bad happened. I wonder if we can do a post-incident analysis and blameless postmortem as a matter of course, not necessarily when something bad happens, and I wonder if we can, like, make it leaner, make it a repeatable thing.

What would that look like? How do you do a blameless postmortem if nothing has happened? It almost seems like, uh, you know, you do, you do. Yeah.

Kevin: I’ve been part of some of

David: done some of these in

Kevin: They’re

Deirdre: yeah. Yeah. It reminds me of doing, if you’re doing agile and you’re doing sprints, you do your planning, and then you do your, after your sprint, what went well, what didn’t go well, blah, blah, blah, blah.

But instead of doing that sort of thing, you do, like, a quote-unquote blameless postmortem of something, and you try to do this actively, repeatedly, as opposed to, like, something bad happened, now we shall learn more about our feedback loops and we shall learn more about invariants that we didn’t think about that are required to make the system behave correctly, uh, or

Kevin: I think doing incident reviews of successful launches is a great idea. Like there’s absolutely no reason that, you know, a blameless postmortem of a successful launch couldn’t teach us some things. Uh, if we approached it with the same sort of eyes. And to your point, David, I think, you know, a risk limiting audit is an example of the tool, but the reason that we do them is I think very motivated by these systemic concerns.

It’s like, you know what invariant must be true in order for the voting system to be safe. And one of the answers is we should just be auditing it all the time.

Deirdre: Yeah. Cool. Um, I’m taking notes, and, like, you’re literally basically saying we need better design docs. Now I’m thinking about all the design docs that I’ve been part of for the past couple of years, and, like, ooh, we didn’t have this, we didn’t have that. We had some, quote, feedback loops, but we did not... yeah. So,

Kevin: They don’t have to be longer. That’s the beautiful thing. A lot of design docs already have a system diagram in them, and then you drop a couple paragraphs in on this stuff, whether from a security perspective or a resiliency perspective or just a making-sure-this-thing-does-what-we-wanted-it-to-do, meeting-our-goals perspective.

Yeah. It’s incredibly powerful and it’s been a lot of fun to bring to people and see, have people like, oh, I get it, and then start to play with it.

Deirdre: This is cool. Uh, do you think Elon Musk likes postmortem analysis?

Kevin: Oh God. Postmortem analysis of the Twitter acquisition. Yeah. I don’t know where to start on that one. Um, and we’re trying to be blameless about it, so we can’t go blaming Elon Musk for this, but yeah. What systemic factors resulted in this? Um, well, Elon Musk. Capitalism. Yes, exactly.

The, some of the Twitter friends, uh, and I who are like deep into this have an ongoing bit about how like, original sin is the, uh, ultimate root cause of everything. Um, like we, all of our root cause analysis should just say that and be done. Or maybe the Big Bang, you know, uh, the, yes,

David: I think in, in some ways, large companies with product market fit are basically impossible to kill.

Deirdre: Mm.

Kevin: That’s my hope.

David: Do you know Steve Yegge? Yagi? I don’t remember how to pronounce his name. Um, Yegge, right? He has a podcast. And I remember he has an episode about how a bad executive can tank your company. And that’s totally true at smaller companies.

But then one of the examples that he gives is how a bad executive basically tanked whatever Xbox came after the Xbox 360, which is true: that one, the Xbox One, was a relative disaster in the market. But if you look at what’s happening now, he got booted out eventually. Phil Spencer’s kicking ass in that department and that’s still going along.

Deirdre: Hmm.

David: Uh, once you get to a certain point, especially in a business with network effects, if the money is still coming in and there hasn’t been a fundamental change in the industry, à la AOL, it’s very, very difficult to kill a large company. And I wonder if there’s something that we can learn, I guess, from capitalism under this lens of resiliency

Kevin: I mean, I think what you point out there is that there are sort of like second and third order feedback loops, which can step in in an example where like a company has a bad product launch and it doesn’t go well and it’s mismanaged, uh, to sort of correct that, you know? And so, you know, like all organizations, like all organisms want to maintain homeostasis, uh, which is just about, you know, keeping all the parameters necessary for survival within the, you know, bounds within which survival is possible.

And so yeah, that’s a very prescient point. And I, you know, in fact if Twitter’s new board steps in and is like, alright Elon, this is clearly not going well, you know, there’s definitely a possibility to rescue something? Maybe? Like now, uh, that’s a lot easier when, you know a company has a bad launch and sells like 20% fewer Xboxes than expected, than when a company fires half its staff, forces another quarter, third, more? To quit.

Deirdre: that. Yeah.

Kevin: And also, like, has advertisers running for the hills because the CEO can’t stop shitposting. Um, so, like, that’s the kind of thing where I think the people who are predicting Twitter’s imminent demise are, uh, pretty, uh, unlikely to be... Yeah. But, like, complex systems can truck along for far longer than anyone would ever expect them to, under pure inertia and the existing things, and then fall apart almost immediately, you know, almost overnight. And so I’m like, this is gonna go on for weeks and weeks, maybe more. And then they’re going to have a two-month outage.

Deirdre: I, I was originally under the same sort of impression, but the World Cup starts on

Kevin: Yes. That is a very, like, that is the kind of thing which could totally just like, yeah, if there’s nobody, and so this is the thing that we are talking about on Twitter. Uh, where I was like, you know, when we would run live events at Akamai, they, you know, uh, well we, we were struggling. But to the extent that they went well, it was a lot because like somebody remembered that the last Super Bowl we had to like over provision in these geographies and like put the queues into this state and remembered all the knobs and levers to turn to be ready for Super Bowl Sunday.

And if those people aren’t there for World Cup, then.

Deirdre: Yeah, and it’s, it sort of goes into what you were describing earlier about like, I think a lot of us are very technically focused about the software does this and the queue does that, and the network does that, and we have a database and then this and then that and the other thing. But secretly, these are like a lot of these complex systems, especially something like Twitter scale, are human-software systems.

There’s a lot of people.

Yeah, like institutional knowledge is walking out the door or getting kicked out the door or getting like, you know, fired on Twitter or whatever, and it’s like, yeah, those people and the things that they remember in their brain, because not every single thing gets written down by every single person, uh, and is, or is easily, easily findable by people

Kevin: especially in the middle of an incident.

Deirdre: Yeah. Like because one, either your Confluence is 16 years old and it’s hard to find anything, or you’re in the middle of an incident and you don’t have time to do the most perfect query. And so there’s all this knowledge about how to make the system go that is just in someone’s brain that’s been there for 12 years or 16 years, and then they get fired and now you’re just like, oh no, we’re gonna have our highest uh, traffic event of every four-ish years.

Kevin: Oh yeah.

Deirdre: and, and like 75% of the people who know how to make the things go normally and have the experience of making them go on the highest traffic event are gone.

Kevin: Yeah, have fun. We say that humans are the adaptive part of any complex system, and a lot of the adaptive capacity of Twitter got ejected at high speed. And yeah, I do not.

Oh my God, I, so, like, when I would run incidents at Akamai, I would often get called in because I was both on the InfoSec side and on the general, uh, Akamai-wide on-call thing, so I would get called into incidents for systems that I hadn’t really heard of before or interacted with all that much. So the role that I played as an incident manager was much more about just helping people talk to each other and getting the right people in the room. And the fact that I worked in InfoSec, and so had a little bit of context on a lot of people at the company, meant that I could see, you know, one engineering team struggling with a thing, and I’m like, oh wait a minute. Uh, you want to talk to so-and-so.

And so basically being a human like switchboard or human rolodex and using Akamai’s really nice company directory to be like, okay, we need to get somebody from so and so team in NOC. Please, you know, please start calling down the, you know, the nameserver tree.

Deirdre: You’re almost like, like a, like an Akamai Clippy sort of like, "it looks like you’re trying to triage this like distributed systems incident. Do you want to talk to so and so?"

Kevin: Yeah, yeah, yeah. And so, oh my God, I would, you could not pay me to manage the incidents that Twitter is going to have because it’s impossible. There’s nobody there to, there’s nobody there to pick up the phone, like, you’re like, I need to talk with somebody on the name service team. They’re gone.

Deirdre: just tum— tumbleweeds. They’re, yeah.

Kevin: in a howling wind like, yeah. Oh my god. Not only will the incident manager not know the systems involved, none of the other people on the incident will know either because they got given this thing last week on top of the other 50 things that they got given and they haven’t even had time.

You know? Uh, yeah.

Deirdre: Plus also they like haven’t been locking out all the people that they’ve fired correctly, so they still have access to lots of stuff,

Kevin: Yay.

Deirdre: they’ve got

Kevin: On the other hand, that does mean that if you need somebody in an incident, uh, this might be like that story about the Apple engineer who shipped the graphing calculator: he got fired and he just kept showing up to work and nobody noticed. Uh, and they included it in the, and so like, if there is a Twitter success story, whether it’s Elon Musk’s Twitter, or the board steps in and is like, dude, you’re doing the Denethor thing. You are sitting on 40 billion and you are like, bring me wood. Bring me oil.

Deirdre: BRING WOOD AND OIL.

Kevin: Go out like the pagan kings of old. I’m like,

Deirdre: Oh

Kevin: If his board steps in and, and like

David: on the flip side, Gondor did

Kevin: Gondor did survive. Yes. However, Denethor did not

Deirdre: No

David: I think perhaps a, uh, more prescient antidote, not antidote, anecdote. Um, Taylor Swift.

Um, so there was the ticket sale for the latest tour several days ago, and they did a verified fan thing to try and, you know, make sure that people who actually wanted tickets were buying them, or that you were at least a person.

And I think that part went reasonably well. Not everybody got through the queue though, but then when the actual ticket sale came, Ticketmaster had a whole host of traffic issues and a lot of people didn’t get tickets. Or it took longer than expected and everything got pushed back.

I saw in a statement put out by Taylor herself today that for the previous tour, her team ran the sales. Not that that tour went off without a hitch. I think there was a rush and lots of scalpers still. I don’t think they did the verified fan thing, but I believe they handled the traffic load.

Deirdre: Okay.

David: Supposedly her team, whatever this team is, I guess she has a crack team of software engineers, told Ticketmaster, these are the traffic levels that you should be prepared for.

Cause they had that institutional memory, like you mentioned about the World Cup earlier, and Ticketmaster was like, yeah, totally, we got this. And then they clearly did not, because everything got pushed back. They could not handle the load, all this stuff. But the tour is still happening. Lots of people still got tickets.

Lots of verified fans still got tickets. Several people I know personally got tickets. I got tickets, actually. And so, you know, obviously it ended well for me, but it’s not like Ticketmaster’s going under, it’s not like this tour is crashing. Um, and so maybe there will be downtime there. There definitely will be downtime.

But like, does that mean the company’s gonna go under?

Kevin: Well, still, my take on that is, I don’t know if Akamai is doing delivery for Ticketmaster or not. Uh,

Deirdre: Oh,

Kevin: uh, DNS that if I want later, but I would not blame them for that either. But, uh, you know, something like that is sort of a garden-variety bad day, and, you know, we saw a dozen of those in my time at Akamai, and people weren’t happy, the customer was unhappy, but, like, maybe they moved to a different CDN or whatever.

Or they discovered how hard it is to build their own CDN, you know, whatever. But I do think that what we are starting to see at Twitter is cutting into hardwood in a way that I’m very concerned by. Yeah, we’ll see. And I also think that if the service continues to run and it got handed off to somebody, like, tomorrow, it would be an enormous crisis for the organization.

And the next several years would really, really suck to be a Twitter engineer. But they would be able to save the service and get the advertisers back and right the ship. And the question is really, can those second-order feedback loops step in before the thing just goes kablooie,

Deirdre: It’s like unrecoverable. Yeah. Because part of running a social network or a, a service that relies on people to run it, like the value of it, which is content, and then you can show ads on the content or, you know, whatever, or pay for, you know, make people pay for access to the content is like, you need people to be there because other people are there.

And if they don’t want to be there, they will leave. And then they will not come back because the people aren’t there. And sometimes that is like an unrecoverable thing, even if you do everything right technically.

Kevin: I mean, LiveJournal had product market fit, but never recovered from, uh, at least in the US from the, uh, acquisition. There’s, and you know, Tumblr, uh, recovered from the Yahoo acquisition, but it doesn’t have anything like the place that it did. And so I think that at least at the moment, like because it’s been so, so new, like Twitter would retain its place as sort of the consensus narrative of Internet connected, like global society.

But if Twitter was down for two months, would it? I don’t know.

Deirdre: yeah, yeah. Or like effectively unusable, which kind of ties back to like what we’ve been talking about, like the invariants, you need to make it like safely running, functionally running operational, like twitter.com might be up. Some tweets might post, some tweets might show up, but like if you like try to just use Twitter and it’s a fucking pain in the ass.

Or like people are gone and like it’s unreliable and like, you

Kevin: Or it’s full of Nazis. Yeah.

Deirdre: or it’s full of Nazis or you know, all this terrible language that used to be much more common on it. And they did a lot of work in the past few years to make it, mu— a nicer place to be. That doesn’t count as like Twitter being operational anymore.

People just leave

Kevin: Yeah. Yeah.

Deirdre: yay.

Kevin: Oh man. Much love to our friends who, uh, were at Twitter and are no longer at Twitter.

Deirdre: Yeah,

Really fucking sucks. It sounded like a nice place to work and I really liked the service that I used it a

Kevin: I use lot

Deirdre: use it. I will probably— my Tweetin’ fingers will still be going and like for a long while and I’ll have to retrain them.

David: I think it was Sarah Jeong that said, uh, I love things that suck, like, I’m staying here for the long haul. Right. That’s exactly how

Kevin: I mean the, like the,

David: I played Mass Effect: Andromeda all the way through. Her and me. We’re staying on the ship.

Kevin: The Viking funeral slash Irish wake for… yeah, has been pretty good, and I’ve been genuinely enjoying getting to see, like, tweeps talking about the work that they did and how much they liked the people they worked with, and people who use the service talking about the things that we loved about it. After so much of it getting blamed for the destruction of democracy, people are like, oh, this hellsite, we really actually like it. You know, it’s easy to over-index on the bad things, but what, right? What’s the Safety Two thing here?

Well, we all have made friends and more on the site and it’s been really meaningful

Deirdre: Hell site is a hell

David: how we find podcast guests.

Kevin: Exactly. Yes. Yes.

Deirdre: no joke.

David: So, you know, on that note, I think

Deirdre: mm-hmm.

David: thank you very much for coming

Kevin: Thank you, David.

Deirdre: Thank you

David: Um, Thomas also thanks you,

Kevin: thank you.

Thomas: I’m here. I.

Deirdre: I know we,

Kevin: I can hear you. Yay.

David: Any closing remarks, Thomas? Okay.

Thomas: This is the worst podcast ever.

Deirdre: It’s

Thomas: Thank you very much, Kevin.

Kevin: Thank you, Tom. Yeah, thank you

Deirdre: thank you

Kevin: Yeah, it’s been a lot.

Security Cryptography Whatever is a side project from Deirdre Connolly, Thomas Ptacek and David Adrian. Our editor is Netty Smith. You can find the podcast on Twitter @scwpod, and the hosts on Twitter @durumcrustulum, @tqbf and @davidcadrian. You can buy merchandise at merch.securitycryptographywhatever.com.

David: Thank you for listening.