A lot of fixes got pushed in the past week! Please apply your updates! Apple, Chrome, Matrix, Azure, and more nonsense.

I have fucking six browsers running on this phone. It’s great. And not all of them are based on Chromium.

This rough transcript has not been edited and may have errors.

Deirdre: Hello, welcome to Security Cryptography Whatever. I am Deirdre.

David: I’m David.

Thomas: I’m Thomas.

Deirdre: I’m a cryptographic engineer at the Zcash Foundation.

David: I don’t know, what am I? I did some stuff once. I’m a has-been at this point.

Deirdre: Ah.

David: I’m the type of person where someone calls me up because they’re in school and they’re like, my professor told me to call you about this thing you did eight years ago.

Deirdre: You have a legacy.

Thomas: I’m a punching bag at fly.io.

Deirdre: Aw.

Today, we do not have a guest. We never intended to have guests; we just ended up having some amazing people we wanted to talk to, and they let us talk to them on our podcast. So today is a bit of a roundup: circling back on some of the episodes we’ve done before, plus a news roundup of security things that are both catching on fire and making us happy.

Let’s see, pertaining to our most... oh God, not most recent. One of our more recent episodes, with the inimitable Ryan Sleevi: DNSSEC...

David: I’d like to think of it as ‘the inevitable’ Ryan Sleevi. He’s like Thanos.

Deirdre: Fair enough. "I am inevitable." Yeah, the inevitable Ryan Sleevi. We didn’t talk enough about DNSSEC.

Thomas: Yeah, if you missed it, it’s my favorite thing we’ve done so far. Ryan Sleevi runs the CA root program for Google, so basically Ryan is one of the people who control who’s allowed to have trusted certificates in your browsers. It was a good conversation.

He told us all how to be our own certificate authorities, which any day now we will all be. And at the end of the conversation, I mentioned to Ryan that we’re probably at a point where we can stick a fork in DNSSEC and declare it dead. We’d had a whole interesting conversation about the ecosystem of how they do CA trust and things like that.

And Ryan shoved an ice pick through my heart and said that what the European governments are doing with government-mandated certificate authorities probably means they’re going to have to consider doing something with DNSSEC, and DANE in particular. DANE is the thing where you use DNSSEC as a certificate authority. And he seems to believe that it’s happening. So that’s the thing that happened, and I’m a little bit speechless about it. So, David, I think...

Deirdre: Still reeling.

Thomas: ...you have the take here, I think.

David: Well, I think I can make you a little happier, because there’s so, so much that needs to happen for DNSSEC organizationally, in terms of technology policy. That work has been happening with HTTPS and certificate authorities, and it simply has not happened with the registrars.

So think about HTTPS and the web PKI, and its base level of validation: domain validation, where you prove you own a domain by setting a DNS record or serving a special file, and then you get a certificate. A kind of straw-man description is that it’s a very convoluted system for delegating trust on first use to a CA. There’s no actual corporate or personal validation. No one is coming to your house to check that you actually own the domain and that you’re an authorized representative of whoever claims to own it.
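For readers who haven’t done it: the "set a DNS record or serve a special file" step is, for Let’s Encrypt and most other CAs today, the ACME protocol (RFC 8555). Below is a minimal sketch of the two values a CA checks, assuming you already have the JWK thumbprint (RFC 7638) of your ACME account key; the token and thumbprint are placeholders, and the actual HTTP and DNS plumbing is skipped.

```python
import base64
import hashlib


def b64url(data: bytes) -> str:
    """Unpadded base64url, the encoding ACME uses everywhere."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def key_authorization(token: str, jwk_thumbprint: bytes) -> str:
    # The CA's random token, bound to your account key via its JWK thumbprint.
    return f"{token}.{b64url(jwk_thumbprint)}"


def http01(domain: str, token: str, jwk_thumbprint: bytes) -> tuple[str, str]:
    """Where the CA will fetch, and the exact body it expects to get back."""
    url = f"http://{domain}/.well-known/acme-challenge/{token}"
    return url, key_authorization(token, jwk_thumbprint)


def dns01(domain: str, token: str, jwk_thumbprint: bytes) -> tuple[str, str]:
    """The TXT record name the CA will query, and the value it expects there."""
    ka = key_authorization(token, jwk_thumbprint)
    digest = hashlib.sha256(ka.encode("ascii")).digest()
    return f"_acme-challenge.{domain}", b64url(digest)
```

Either way, nothing here proves who you are, only that you control the domain’s web server or DNS at that moment, which is the trust-on-first-use flavor David is describing.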

Right, and for good reason; I don’t think we’d be in a better world if that were happening. What we’re really building is a system where whoever has had the domain the longest gets to have certificates for it without it looking weird. And the reason for that is certificate transparency, right?

When a CA issues another certificate for your domain, it has to be added to the certificate transparency logs for browsers to trust it. If it doesn’t get added to the logs, it won’t be trusted, which has the side effect that if a certificate gets mis-issued because someone hijacked your DNS, it should eventually end up in the logs.

And so in that sense, you’re getting the same certificate that everyone else is getting.

Deirdre: Yeah. And you can check; that’s the whole point of the transparency. It has given some people qualms. They want their certificate to be trusted, but they don’t want to advertise a product that hasn’t launched yet. They want to get their ducks in a row and have the certificate trusted by Chrome, or anyone else that requires an SCT (a signed certificate timestamp) every time you negotiate a TLS connection, without leaking the fact that mynewproduct.com is sitting in the certificate transparency log.

And I think there are some workarounds for that now, or something like that.

David: Yeah, there is some form of... gosh, I forgot what they called it, like blinding, but I think that might’ve been gotten rid of, or it’s only in the next version of the CT RFC,

Deirdre: Oh,

David: ...which also doesn’t really exist. For whatever reason, version two was standardized, or mostly standardized, a while ago.

And then everyone just stuck with version one. Someone who pays more attention to the web PKI can probably correct me on that. But the point I’m going for is this, especially once you introduce gossip to verify that the SCTs you’re seeing are the same as everyone else’s: an SCT is really just a promise that the certificate will get added to the log.

It might not have been added yet. So there’s a convoluted scenario where you work with the CA to issue an SCT and then never actually add the certificate to the log. But browsers are supposed to check that it actually ends up in the log eventually, and you can compare notes with other people.

So basically it’s fairly difficult to issue a certificate for a domain and not have anybody notice,

Deirdre: um,

David: nowadays.

Deirdre: Nowadays.
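The "an SCT is just a promise" point is easier to see with the mechanism in hand: what a browser or monitor can actually check later is that the certificate really landed in the log’s Merkle tree. Below is a minimal in-memory sketch of the RFC 6962 / RFC 9162 tree hashing and inclusion-proof check, assuming arbitrary byte strings as entries (real logs hash a specific TLS-encoded leaf structure) and ignoring the log’s signature on the tree head. The function names are ours, not any library’s.

```python
import hashlib


def leaf_hash(entry: bytes) -> bytes:
    # The 0x00 prefix domain-separates leaves from interior nodes.
    return hashlib.sha256(b"\x00" + entry).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()


def _split(n: int) -> int:
    """Largest power of two strictly less than n (n >= 2)."""
    k = 1
    while k * 2 < n:
        k *= 2
    return k


def tree_hash(entries: list[bytes]) -> bytes:
    if not entries:
        return hashlib.sha256(b"").digest()
    if len(entries) == 1:
        return leaf_hash(entries[0])
    k = _split(len(entries))
    return node_hash(tree_hash(entries[:k]), tree_hash(entries[k:]))


def inclusion_proof(index: int, entries: list[bytes]) -> list[bytes]:
    """Audit path for entries[index], leaf-to-root order (what a log serves)."""
    if len(entries) <= 1:
        return []
    k = _split(len(entries))
    if index < k:
        return inclusion_proof(index, entries[:k]) + [tree_hash(entries[k:])]
    return inclusion_proof(index - k, entries[k:]) + [tree_hash(entries[:k])]


def verify_inclusion(entry: bytes, index: int, tree_size: int,
                     proof: list[bytes], root: bytes) -> bool:
    """Client-side check, following the RFC 9162 verification steps."""
    if index >= tree_size:
        return False
    fn, sn = index, tree_size - 1
    r = leaf_hash(entry)
    for p in proof:
        if sn == 0:
            return False
        if fn & 1 or fn == sn:
            r = node_hash(p, r)
            # Skip levels where this node had no right sibling.
            while not fn & 1 and fn != 0:
                fn >>= 1
                sn >>= 1
        else:
            r = node_hash(r, p)
        fn >>= 1
        sn >>= 1
    return sn == 0 and r == root
```

Gossip, in this picture, is clients comparing the root hashes (signed tree heads) they were served, so a log can’t quietly show you one tree and everyone else another.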

David: And it’s basically impossible to do that with DNS, because half the value proposition of DNS is that you can serve different IPs to different people. This is how DNS load balancing works.

Deirdre: Yep. This is how you get regional... not load balancing, but if someone looks up google.com and they’re located in, say, Turkey, maybe we’ll redirect them, or suggest Google’s Turkish domain, google.com.tr.

And then anyone who hits Google Turkey goes to infrastructure that’s localized for them, in their language, with everything needed to serve them rapido instead of going around the world. And DNS is a big part of that.
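To make "serve different IPs to different people" concrete, here’s a toy sketch of what a geo-aware authoritative server does, assuming a hypothetical zone table keyed by client region and using RFC 5737 documentation addresses. Real deployments usually infer the region from the resolver’s address or the EDNS Client Subnet option (RFC 7871); that part is hand-waved here as a `client_region` argument.

```python
from collections import defaultdict

# Hypothetical zone data: per-region answer pools for one name.
ZONE = {
    "www.example.com": {
        "eu": ["192.0.2.10", "192.0.2.11"],
        "us": ["198.51.100.20"],
        "default": ["203.0.113.30"],
    }
}

_query_count = defaultdict(int)  # (name, region) -> queries answered so far


def resolve(name: str, client_region: str) -> list[str]:
    """Answer differently by region, and rotate within a pool (round-robin)."""
    pools = ZONE[name]
    pool = pools.get(client_region, pools["default"])
    key = (name, client_region)
    i = _query_count[key] % len(pool)
    _query_count[key] += 1
    return pool[i:] + pool[:i]
```

Two honest clients asking the same question get different, equally correct answers, which is why a CT-style "everyone sees the same thing" log doesn’t map cleanly onto DNS answers.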

David: Exactly. And so there’s this whole system for visibility into the web PKI that just doesn’t exist for registrars, to say nothing of the organizational audit and policy requirements, like the BRs (the CA/Browser Forum Baseline Requirements) that Sleevi was talking about. There certainly are rules to be a registrar, but I’m not under the impression that they’re the same kinds of rules that apply to a CA.

So when people talk about switching to DNSSEC, there’s this whole host of improvements in the web PKI over the last 10 years that are just nonexistent in the DNSSEC world. And that’s before you even get to why they’re using weird old crypto.

I’m fine even hand-waving and saying, let’s assume all the crypto gets better, let’s assume the clients somehow get updated. Finagling registrars into a security posture at least as good as certificate authorities have now, or at least the good ones, just seems totally out of reach for a very long time without a...

Deirdre: So you’re saying we need DNSSEC-CT. We need DNSSEC transparency. We need more. We need blockchain for DNSSEC. It’s all fucking Merkle trees all the way

David: down.

Well, the thing there, though, is that you don’t necessarily want to serve the same thing to everybody in DNS, right? That’s what we were just talking about. So I don’t even know that that’s feasible.

So I don’t see DNSSEC going anywhere until you solve some of those things, and I don’t think anyone’s really talking about that.

Thomas: I feel like I might be the Internet’s foremost noisy hater of DNSSEC. My calling card on the internet is disliking DNSSEC; I have a long catalog of problems with it. A thing to pay attention to here, and maybe I’m just trolling Ryan Sleevi into correcting me on Twitter, is that Google has market power, and institutional power, over the certificate authorities, right? We generally cast the certificate authorities, except for Let’s Encrypt, as the bad guys in the internet trust story. Ryan Sleevi doesn’t, but we do, right?

They make money selling people certificates. They don’t make money making certificates safer. And the perception is that they’re generally an obstacle to getting better internet trust deployed. I think that’s probably mostly true,

Deirdre: Mmm

Thomas: But Google can kill a CA, right? Google has killed a CA. Google has killed some of the largest CAs there are, for policy violations, and there are decades of history built into how Google gets to do that. And not just Google: Apple could do it, Mozilla could do it,

Microsoft could do it. There are decades of history in how that works and what institutional power they have over certificate authority policies. I don’t believe that exists for DNSSEC: not just the functionality David is talking about, but the institutional ability to push that stuff onto the registrars.

For me, it always comes down to this: Google can kill a certificate authority, and everyone will scramble to a new certificate authority to get out of the blast radius of Google killing Verisign or whatever it is they killed. And that works because it’s pretty straightforward to switch certificate authorities.

But you can’t switch out of .com, right? Not if .com is misissuing, or not following policy, or being an obstacle to deployment. Say Google wants to declare there’s going to be no 1024-bit RSA anywhere in the DNSSEC hierarchy, or that it won’t honor signatures for this domain or whatever, right?

You can’t jump from .com to a different .com. That doesn’t exist. And I feel like there’s an answer to this, and I think Ryan Sleevi, you said this: well, this is a reason to be careful about which domains you’re on. But the reality is that Google in particular is locked to specific domains that they have to be on.

And if those domains, and the people managing them, aren’t behaving, there’s no recourse, right? The registrars could very well win the battle the certificate authorities lost. That’s one of my many concerns with DNSSEC.

Deirdre: That’s funny, because I didn’t even think about that. You can take your google.com and go certificate authority shopping, and you just keep google.com forever. But you can’t shop around for a different... you can’t register it again and again. You register once.

David: I think the counterargument would be that you’d basically see what happened to Symantec happen to .com. DigiCert bought Symantec’s whole certificate business as it was getting shut down, and it was basically, "Hey, Symantec customers, guess what? Not only can you not use it anymore, but we have it. Let me tell you about my good friend DigiCert slash Stratego."

So the counterargument might just be that, yeah, you can’t get rid of .com, but you could transfer control of .com, or of that aspect of it, to someone else. That’s the only feasible workaround for something like this that I can think of.

Thomas: Well, also, Google is only now getting control over the certificate stores themselves. Chrome is taking control of its own root store. But until pretty recently they didn’t; they relied on the operating systems to do that for them,

David: Yes, but they still had...

Thomas: So with the...

David: ...rules on top of that. You could do somewhat weak chaining with the operating system and then enforce additional constraints on top. For example, when Heartbleed happened, they manually inserted code that checked for the Cloudflare SPKI that had been revoked. Cloudflare stopped using one of their certs, the one that corresponded to all of the Cloudflare certs that were potentially compromised. And there are other checks like that. That’s how they enforced some of the distrusts. I mean, that’s how they enforced the distrust of Symantec, even, because I don’t think their root store is shipping, or at least it’s not shipping everywhere.

So you end up with extra code within the TLS stack that looks at the result of the operating system’s verification and enforces additional constraints. It’s still way better to do the verification yourself, because there are so many different ways to chain a certificate in the common case that you kind of want to know all of them before you make the decision.

Whereas many APIs will just give you back one of them, and there might be another one, and if you don’t like the one they gave you, you’re SOL. So there is room to do policy before you control a root store, and in fact it’s what Google’s been doing for years. I don’t think that detracts from your point at all, though.
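A rough sketch of the "extra policy on top of OS verification" pattern, assuming the platform verifier hands back candidate chains as lists of DER-encoded SubjectPublicKeyInfo blobs. The blocklist, the byte strings, and the function names are hypothetical placeholders, not Chrome’s actual code; the point is that the check works well only if you can see every candidate chain, not just the one an API happened to return.

```python
import hashlib
from typing import Optional

Chain = list[bytes]  # each element: DER-encoded SPKI, leaf first


def spki_sha256(spki_der: bytes) -> str:
    return hashlib.sha256(spki_der).hexdigest()


def chain_allowed(chain: Chain, blocked: set[str]) -> bool:
    """Reject any chain that contains a blocklisted public key anywhere."""
    return all(spki_sha256(spki) not in blocked for spki in chain)


def choose_chain(candidates: list[Chain], blocked: set[str]) -> Optional[Chain]:
    """Return the first OS-built chain that also passes our own policy."""
    for chain in candidates:
        if chain_allowed(chain, blocked):
            return chain
    return None
```

If the verifier only ever returns the blocked path, `choose_chain` gets a one-element list and returns `None` even though a clean alternative chain existed; that is the "you’re SOL" case.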

Thomas: Yeah, the only other thing I have to say about DNSSEC is that I have many, many more arguments besides this argument.

David: You’ve got to go down to your argument vending machine and...

Deirdre: Tom, is the only reason DNSSEC hasn’t already moved to curves that... I think Sleevi told a story where they tried to deploy minimal DNSSEC, and it broke some Google VP’s networking hardware that they had deployed in their house and hadn’t touched in like 20 years.

And they had to send engineers to figure out why they broke the VP’s network. And it was because of DNSSEC, because old DNS can’t handle, like, 500 bits of RSA in the thing? Is that...

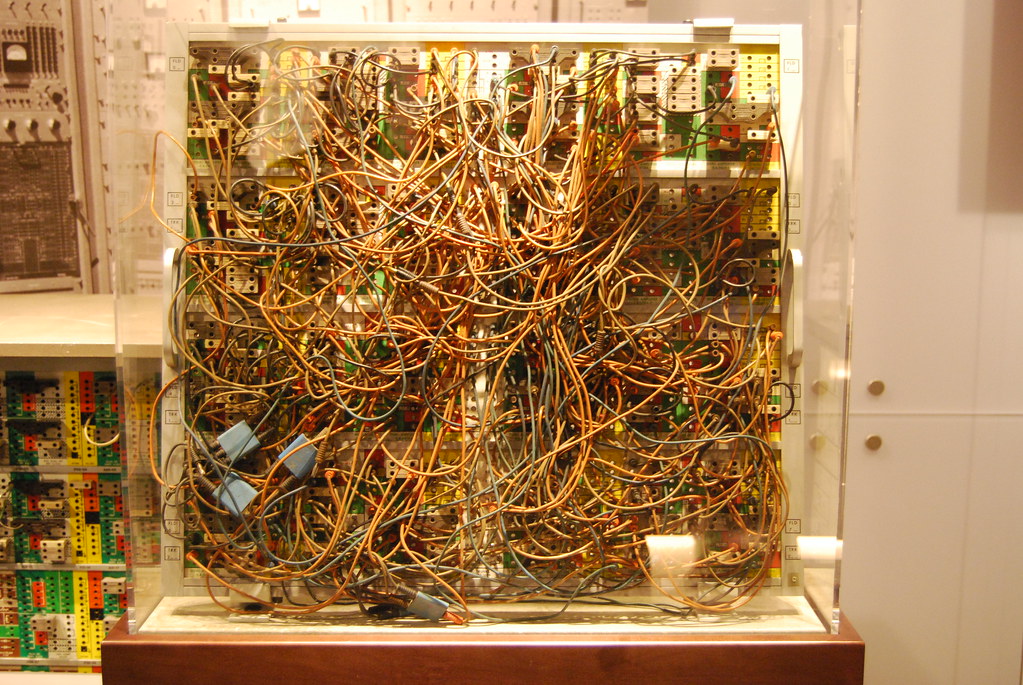

Thomas: This is such a can of worms, right? When you think about the web PKI, there are a lot of code bases and a lot of installations that make the web PKI actually work, but it’s a relatively constrained number of installations. The DNSSEC hierarchy, by design, is 1980s-distributed.

It’s not distributed in the sense we would mean today, but the authority over DNS records is distributed over thousands and thousands of different organizations. Everybody who owns a domain, in a sense, has control over how that domain is administered, what records it’s going to support, and what software it runs.

And that also goes for the client side of DNS, the things that actually validate DNSSEC records. For, I think, 10 years now, and I think it’s Geoff Huston, at APNIC (I should know this), they’ve been doing studies on DNSSEC compatibility, and for a long time curve-based DNSSEC was breaking things. Not breaking home routers; DNSSEC by itself breaks home routers, right? Any DNS query that doesn’t look like a DNS query that would have been issued in 1995 will break a home router, which is why Google tried DANE and then pulled it out.
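One commonly cited reason DNSSEC trips over old gear: a DNSSEC-aware query has to use EDNS(0) (the DO bit lives there), and signed answers quickly grow past the 512-byte limit that classic DNS over UDP assumed (RFC 1035), which is exactly the kind of traffic 1995-era middleboxes mishandle. A back-of-the-envelope sketch follows, assuming a hypothetical 200-byte unsigned response, two RRSIG records signed by example.com, and compressed owner names; it ignores DNSKEY, NSEC, and OPT records entirely.

```python
# Signature sizes in bytes. An RSA signature is as long as its modulus;
# ECDSA P-256 (RFC 6605) and Ed25519 (RFC 8080) signatures are 64 bytes.
SIG_BYTES = {"RSA-1024": 128, "RSA-2048": 256, "ECDSA P-256": 64, "Ed25519": 64}

RR_HEADER = 2 + 10   # compressed owner name + type, class, TTL, rdlength
RRSIG_FIXED = 18     # type covered, algorithm, labels, original TTL,
                     # expiration, inception, key tag
CLASSIC_UDP_LIMIT = 512


def wire_name_len(name: str) -> int:
    """Uncompressed wire length: each label is length-prefixed, plus the root byte."""
    labels = [label for label in name.rstrip(".").split(".") if label]
    return sum(1 + len(label) for label in labels) + 1


def rrsig_bytes(alg: str, signer: str) -> int:
    # The signer name inside RRSIG RDATA is not compressed.
    return RR_HEADER + RRSIG_FIXED + wire_name_len(signer) + SIG_BYTES[alg]


def response_size(base: int, n_sigs: int, alg: str, signer: str) -> int:
    """Hypothetical unsigned response of `base` bytes plus n_sigs RRSIGs."""
    return base + n_sigs * rrsig_bytes(alg, signer)


if __name__ == "__main__":
    for alg in SIG_BYTES:
        size = response_size(200, 2, alg, "example.com")
        print(f"{alg:12} {size:4d} bytes, fits in 512: {size <= CLASSIC_UDP_LIMIT}")
```

Under these made-up assumptions, two RSA-2048 signatures push the answer to roughly 800 bytes while P-256 stays around 400; real responses carrying DNSKEY sets and denial-of-existence records get considerably bigger.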

But even in real DNSSEC implementations, where you could actually speak DNSSEC to them, curves broke things anyway for a long time. And you can kind of imagine why: people deploy DNSSEC software that’s built with the assumption that everything is going to be RSA, it doesn’t have curve support, curves were exotic when the code was written, or whatever.

The thing to remember there is that certificate authorities can upgrade and comply with new things pretty quickly. Getting a huge install base of DNSSEC servers updated is a nightmare problem. You need to fix all of those incompatibilities, and also get everyone to issue the new curve-signature records instead of the RSA signature records.

All of that has to get deployed. Also, and this is obvious when you think about it, you don’t just have to get people issuing curve-based signatures; you also have to get everyone to stop issuing RSA-based signatures. Otherwise you’re just getting whichever of the two has the lower security.

I don’t know how long it would take to cut the entire internet over to curve-based signatures.
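The "you get whichever is weaker" point follows from how validation works: a validator is generally satisfied by any one signature it can verify with a supported algorithm (RFC 6840 says validators should accept any single valid path), so an attacker only needs to forge the weakest one the validator accepts. A minimal sketch, assuming rough classical security estimates in the style of NIST SP 800-57 (RSA-1024 about 80 bits, RSA-2048 about 112, P-256 and Ed25519 about 128); the table and function are illustrative, not any resolver’s API.

```python
from typing import Optional

# Rough classical security estimates in bits (NIST SP 800-57 style figures).
SECURITY_BITS = {"RSA-1024": 80, "RSA-2048": 112, "ECDSA P-256": 128, "Ed25519": 128}


def effective_security(zone_algs: set[str], validator_algs: set[str]) -> Optional[int]:
    """Bits of security a given validator actually gets from a zone's signatures.

    Any single verifiable signature is enough, so the weakest usable algorithm
    wins. If the validator supports none of them, it treats the zone as
    insecure (effectively unsigned), reported here as None.
    """
    usable = zone_algs & validator_algs
    if not usable:
        return None
    return min(SECURITY_BITS[a] for a in usable)
```

The full 128 bits only show up once the RSA signatures are gone, and an un-upgraded validator looking at a curve-only zone finds no usable signatures at all, so it falls back to treating the zone as unsigned.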

David: I mean, we’re not even there on the web PKI. A lot of my TLS connections are doing curve-based key exchange, but the signatures in the certs are almost always still RSA.

Deirdre: It’s really sad. I was giving someone crap, like, oh man, when do we get Curve25519-based certificates, and they were like, yo, we’re not even on NIST-curve certificates. I don’t know what the numbers are now, but at the time the vast majority were RSA-based certificates, which is just, like...

David: I once started a company that literally calculates this number on a day-by-day basis. So let me go check. Keep going.

Thomas: Yeah. As you guys are probably realizing, I can go on and on about this topic. I keep giving you opportunities to get off it, and you follow up with more DNSSEC questions. So...

Deirdre: Because we can rabbit-hole on this all day. Sad, sad panda. If we can’t even move non-DNSSEC certificates and keys from RSA to curves... oh, good Lord. What hope do we have for the most fragile DNS infrastructure, written in the late eighties and early nineties, to do it as well?

Although maybe by then we’ll have the most beautiful curves to move to. We probably won’t, though, because it’ll all be NIST curves and we’re going to be deploying P-256 forever and ever. But that’s not as bad as it used to be, because we have new curve formulas and they’re better now.

So that’s not so bad. That’s DNSSEC.

Other news.

David: Quick breakdown of currently trusted certificates: I’m seeing 592 million using RSA-SHA256 and 44 and a half million using ECDSA-SHA256, which I think is a NIST P-curve. That’s way more than I thought, to be honest. A couple of confused people are using RSA with SHA-384; they must have a really weird key length or something.

Deirdre: A bigger number betterer, right.

David: I don’t even know who you get to issue those.

Deirdre: Maybe Google? I don’t know. So RSA is well over 90%?

David: Yeah. 91.42%.
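A quick sanity check on the figures as spoken, assuming the rounded counts (592 million RSA-SHA256, 44.5 million ECDSA-SHA256). Those two buckets alone put RSA at about 93%, so the 91.42% figure is presumably measured against all trusted certificates, including categories not read out. The implied remainder below is an inference from rounded numbers, not a reported figure.

```python
rsa_sha256 = 592_000_000
ecdsa_sha256 = 44_500_000
reported_rsa_share = 0.9142  # as read out; assumed to be RSA-SHA256's share of all certs

share_of_two = rsa_sha256 / (rsa_sha256 + ecdsa_sha256)
implied_total = rsa_sha256 / reported_rsa_share
implied_other = implied_total - rsa_sha256 - ecdsa_sha256

print(f"RSA share of the two named buckets: {share_of_two:.2%}")
print(f"Implied total: {implied_total / 1e6:.1f}M; implied other: {implied_other / 1e6:.1f}M")
```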

Deirdre: Yeah. And we’ve had curve-based certs for over 20 years? 25? Something like that. Anyway, thank you for doing a startup that could give us this information on the fly. It’s extremely handy.

Other news. iPhones are on fire.

iOS is on fire. All right, we’ll start with this: there was a story in MIT Technology Review about how a US company, Accuvant, sold iOS exploits to the United Arab Emirates, and the UAE then used them against, quote unquote, terrorists. Not terrorists: people they call terrorists, and we would call activists, journalists, stuff like that.

And that dovetails with Apple, this week, or the week of the 17th, patching all their platforms (iOS, macOS, iPadOS, watchOS, all the OSes) because they were patching a zero-day exploit found by the research group Citizen Lab at the University of Toronto, which they called FORCEDENTRY. I think they found it because they got a list of NSO Group’s possible targets.

And it’s kind of like, don’t ask how we got this possible target list, we just found it. And then they started contacting people: do you mind if we look at your devices? Some of these were family members of Saudi nationals and Saudi activists, such as people who knew Jamal Khashoggi, who was murdered by the Saudi royal family. It turned out that the devices they were using around the time he was murdered were full of this no-click zero-day in iOS, which was eventually patched by Apple.

That took about a week, say, though some other people have said almost 20 days from when Citizen Lab sent a crash log to Apple to when Apple actually released the patches this week (I think it was the 14th of September). I think they said it was about six days from when they delivered a full exploit to Apple. This relates to some of our ranting about Apple security in previous episodes, and more broadly to how you secure platforms like this, run a secure software program, and patch. Apple claims that they patched this as expeditiously as possible.

But basically all the information indicates that, no, they released it on about their regular patch cycle. And if you cannot get a patch out for your platforms faster than your normal patch cycle for something like this, which was a no-click exploit, a vulnerability in WebKit and their general media parser library...

And I forget the name of it, but you could have a malicious PDF that looks like a GIF, and you can just push it to someone. They don’t even have to click on it; it would get parsed. I think the vector to actually hand over the payload was iMessage again. It would execute and then persist until reboot. If you rebooted the phone, it would wipe it, and they would have to do the exploit again.
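The "PDF that looks like a GIF" detail reflects a general pattern worth seeing in miniature: many media stacks choose a decoder by sniffing a file’s leading magic bytes instead of trusting its name, so the attack surface is every format the library can parse, not just the one the filename claims. This is an illustrative sketch with hypothetical function names, not Apple’s code.

```python
from typing import Optional

# Leading "magic" bytes for a few common formats.
MAGIC = [
    (b"GIF87a", "gif"),
    (b"GIF89a", "gif"),
    (b"%PDF-", "pdf"),
    (b"\x89PNG\r\n\x1a\n", "png"),
]


def sniff(data: bytes) -> Optional[str]:
    """Identify a format from its content alone."""
    for prefix, kind in MAGIC:
        if data.startswith(prefix):
            return kind
    return None


def pick_decoder(filename: str, data: bytes) -> str:
    """Content wins; the file extension is only a fallback."""
    kind = sniff(data)
    if kind is not None:
        return kind
    return filename.rsplit(".", 1)[-1].lower()
```

A file named `cat.gif` whose bytes start with `%PDF-` goes straight to the PDF decoder, which is why "it’s just a GIF" is no reassurance at all.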

Yeah. Everything’s broken.

David: So the one thing I’ll say is that I can’t imagine it would be possible to do a release in fewer than three days, and that would be an insanely fast release. Probably five days, a business week, realistically: that’s a day to patch, at least a day of building stuff, and then a day to actually release it, so there’s your three days. And assuming you want to do release testing, add on another day or two. That’s realistically the fastest I think a release like this could happen.

Deirdre: But this would qualify for that.

David: So they hit it in six days. But I think the takeaway is that they actually had it for like 14 days,

because that trace should have been enough to do the patch. So you can kind of assume that they actually did the patch from the trace, and then it took another 14 days or whatever it was to push out the release on the regular cycle. Sure, they might kind of imply they did the patch once they saw the exploit, but realistically they could have patched it from the backtrace.

And then you’re looking at a not particularly fast timeline. I mean, it’s not a 1990s timeline, but...

Deirdre: Yeah. I’m looking at the specifics, both from the patch notes and from the Citizen Lab write-up, which is very good, and we’ll add links to both in our show notes. I was talking about this somewhere else: you’ve got a bunch of OSes, iOS, iPadOS, watchOS, macOS, and there’s another one somewhere, like the iPod touch, because of course the iPod touch has a fucking browser in it. Everything has a fucking browser in it. So you’ve got a bunch of those that have to go through quality testing before you push out a fix, but they were all using the same components.

They’re all using CoreGraphics and WebKit. That’s the pro and con of a shared dependency, especially for someone like me who works on fucking cryptography with a ton of shared dependencies, because some of them are very bespoke. If you have all your eggs in one basket and you secure the fuck out of your basket, maybe you can deal with that.

But if everyone is sharing the one basket and there’s something wrong with it, everybody gets fucked. The other side of it is that you can have multiple implementations. You take on the trade-off of maintaining multiple implementations, making sure they’re compatible, and making sure you’re not reproducing bugs or security vulnerabilities between them, but then maybe you won’t all get bit by the same fucking vulnerability at the same fucking time.

So yeah, I want to give them the benefit of the doubt when you’re maintaining all these OSes. Even if they share the same components, you have to take a few days to do quality assurance, get it out, and make sure you’re not going to break anything. But if you had a trace for two weeks or more, and it took you this long... this is the showstopper: they did not find this. A third-party research org found it in their own investigations and was talking about it for weeks.

And Apple only finally did something after being handed a full exploit chain, and it still took them this long to push it out. That doesn’t feel good. It feels like, given their organization and their security software deployment system, they cannot move fast when they need to. And that is a problem.

Thomas: Have people reversed the patch yet? Do we have good intelligence about how much got fixed in that patch? We have the three-day thing for identifying, QA, and then deploying the patch, right? But there’s another step in the common case when vulnerabilities are reported:

you go look to see if it’s systemic, if there are lots of other instances of that thing, which also takes time. You can imagine a vulnerability being reported, and 45 minutes of scouting in the code shows you that there are other places that are potentially vulnerable.

All of those need to get fixed as well, especially because the patch conclusively identifies the pattern of the flaw anyway. That’s the thing I think people don’t think about much: as soon as that patch is released, you’ve got a blueprint of what the vulnerability is. So you can also imagine taking a minute to make sure you’re not going to have to do a string of updates, because at a certain point people won’t update back to back over and over again. Updating is a pain in the ass. I have no reason to believe that’s any part of what happened here.

But another thing that can blow out patch timelines is just taking a minute to see what else that vulnerability affects. The people who found the vulnerability may or may not know about all the other instances of that code pattern.
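Both points, that a released patch is a blueprint and that the same bug pattern may live elsewhere, can be shown with a toy example. Everything here is hypothetical: the C snippet, the `MAX_SEGMENT` guard, and the file names. Diffing the before and after versions exposes exactly what was missing, and a naive scan then flags other call sites that lack the same guard; real variant analysis uses far better tools than substring matching.

```python
import difflib

BEFORE = """\
void copy_segment(uint8_t *dst, const uint8_t *src, size_t len) {
    memcpy(dst, src, len);
}
"""

AFTER = """\
void copy_segment(uint8_t *dst, const uint8_t *src, size_t len) {
    if (len > MAX_SEGMENT) return;
    memcpy(dst, src, len);
}
"""


def added_lines(old: str, new: str) -> list[str]:
    """Lines the patch added: usually a neon sign pointing at the bug."""
    diff = difflib.unified_diff(old.splitlines(), new.splitlines(), lineterm="")
    return [line[1:].strip() for line in diff
            if line.startswith("+") and not line.startswith("+++")]


def unguarded_sites(sources: dict[str, str], call: str = "memcpy(",
                    guard: str = "MAX_SEGMENT") -> list[str]:
    """Files that make the same call but never mention the new guard."""
    return sorted(name for name, text in sources.items()
                  if call in text and guard not in text)
```

Anyone diffing the update learns the missing check in seconds; the vendor’s job is to run the second function over its own code before the attackers do.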

Deirdre: Yeah. I don’t know if it’s been torn down yet. I’m doing a quick grep. Oh, there’s something on the Objective-See blog. I’m going to look at that.

David: While you’re looking at that, I’ll just say hats off to the Citizen Lab people, especially Bill Marczak, who’s listed first on this blog post. I don’t know if that has significance here or not, but he’s been working on security for activists since before I was in grad school,

so probably 2013 or earlier, after, I think, some of his friends, I want to say in Bahrain or something, got hacked by the government. I don’t recall specifically, but he has a bunch of papers. He has a PhD from Berkeley, and he’s been really working on this type of stuff with Citizen Lab. I’m pretty sure that in the last three to five years it’s mostly been NSO Group stuff, but there was a lot of Hacking Team stuff back, I want to say, circa 2017, 2018. But I think Hacking Team went under; maybe they got bought by NSO Group.

Deirdre: I wouldn’t be surprised. They kind of flamed out very publicly. So maybe they got bought, maybe they got gutted and bought, or maybe they just kind of fell apart.

Thomas: by the way, I don’t know how connected the, um, the FORCEDENTRY story, which is the public Citizen story, and the thing that prompted the update right now, um, how closely connected that is to the MIT technology review story about Optiv and Accuvant. there’s like two kinds of long running stories right now about weaponized, you know, weaponized and marketed vulnerabilities.

one of them is the NSO story about how there’s a product company in Israel that you can, you know, governments can just go buy the stuff from, and, you know, journalists and stuff like that. Right. The other story is the one about a group of ex-US intelligence community people, who went to work as quote unquote mercenaries, which I’m fine with, with that characterization.

So we’ll just call them mercenaries from now on. Right. But they went to work as mercenaries for the UAE, for a specific team that the UAE put together, and themselves acquired and weaponized vulnerabilities, um, like the, the team that they managed, according to the DOJ filings and according to the MIT technology review story.

Okay. What I was doing some amount of their own kind of, implant development work and weaponization work and stuff like that, on their own. it would be weird if they weren’t interrelated somehow, like at the UAE team, for some reason, it wasn’t working with NSO, um, in the DOJ filings, there’s two companies that this UAE mercenary team— those mercenaries of are all pleading guilty or something like that.

Right now, to felonies for breaking ITR and stuff like that. Right. Which has its own kind of interesting story.

Um, but in the filings that they apparently sourced vulnerabilities from two different companies, a US company. Oh, I guess the other one can’t be NSO because they’re both a us company 4 and us 5 and NSO is not a us company.

So yeah. I don’t know what the relationship is. I have a bone to pick with the, um, with the MIT technology review story though. Um, that, that story kind of centers around the role of Accuvant and Optiv in the story, where like the reveal is that one of the companies behind these vulnerabilities is Accuvant, um, that Accuvant sold to this mercenary team, you know stuff to let the UAE target journalists with, which I’m sure, by the way, it’s true. That’s not my bone to pick with a story, but if you read the story, you’d get the impression because they wrote it this way, that Accuvant is a small team in like Denver, Colorado, um, that works on, you know, iOS vulnerabilities.

and that that’s what Accuvant is. But if you’re in the field, you know that Accuvant is not that at all. Accuvant is a giant consulting company. They’re one of the larger software security and network security consulting companies. They’re called Optiv now.

David: And if you’re in Denver, you’ll know that one of the larger buildings downtown is the Optiv one, possibly the tallest. I’m not sure.

Thomas: That makes me sad. Right. So I think there’s a whole reputational thing here. I think the reason this is interesting is that a lot of people work for Optiv. It’s a totally mainstream, normal place to go do security consulting work. Um, I knew they had an exploit development program.

I’ve got friends that have had contacts with it or, you know, worked with it or whatever. I didn’t realize that they were doing that for, you know, the quote-unquote intelligence community, or the gray-market intelligence community. People have kind of pointed out that if you’re really good in this market, like if you’ve got contacts and you’re a credible organization that builds and weaponizes vulnerabilities and sells them to, like, the NATO markets, right, then there’s, you know, a whole pipeline of good contacts that you work with there, so you don’t have this problem. And it’s kind of a tell that maybe you’re not good at doing this work if you end up selling to shady mercenary outfits in the UAE, because with the big-time people that do this work for NATO players, right, the sense I get from people talking to me about this is that that’s not what happens. Either way, though, right? I don’t know how much you’d worry about it. I feel like Accuvant/Optiv is getting some of their just deserts here. Right. But it would not be fair to assume that any random person that worked at Accuvant had anything the hell to do with this, right?

Like, most of the people that do software security at Accuvant test web applications for the people that built those web applications. They are not a tiny company in Denver that just specializes in shady iOS exploits. And the article itself, the MIT Technology Review article, talks about how extensively the DOJ filings established the role that Accuvant had in this.

But if you look at the actual filings, it’s, you know, a couple of sentences saying, "okay, there’s this US Company 4 that sold us this exploit; the exploit wasn’t usable as an implant; basically, it told the user that they were compromised." And it’s the kind of thing you would build to test the device for, like, a red team exercise or something like that.

And then they took the red team tool and of course, you know, changed it, so it would be an actual weaponized exploit. Either way though,

David: So the group bought, like, the pen-testing tool and then paid two random people that previously worked at Accuvant to turn it into a real exploit?

Thomas: Yeah, either— I mean, it’s horrible behavior, right? It’s on the management of Optiv. In fact, it’s even more on the management of Optiv, because they’ve managed to tar a lot of people that do totally benign work, just professional software security people, with this brush of also, you know, trying to get journalists killed.

Right. Um, it is, you know, fucked up that Optiv management let them do that. That was a decision they should not have made. Right. But if you’re reporting on this stuff, you should get these kinds of details right.

David: Did they do it within their capacity as Optiv employees? Or as independent contractors?

Thomas: This is in their capacity as Accuvant employees. So the timeline here is from like 2015 to 2017, and at that time, Optiv was a big company.

David: Yeah. Yeah. I mean, people, especially message board people, who Thomas loves, freak out about, "oh, they’re going to be coming for my Black Hat training." It’s like, no, it’s perfectly fine to teach people how to write exploits, to write exploits, and even to sell exploits. But what you can’t do is provide support or build something for someone that you specifically know is doing something illegal.

Like yeah, you

Thomas: I’m a little fuzzy on that, actually. Right. You know, I kind of wanted to come into a thread like that guns blazing, saying, "of course you can teach exploit development to whoever you want." Right. But they’re charged with ITAR violations. Right. They’re under export controls. So there might be some threshold of training past which you’re no longer teaching people things.

And now you’re actually transferring, essentially, arms. Um, I don’t know what the threshold is there, but it’s an interesting question, right? I’d be careful about doing that kind of work with, you know, people overseas. Thankfully, I don’t do that kind of work. Right. But if I did, that might be a thing I’d be careful about, and it might be tricky to find a lawyer who can give you a good answer on that, too.

David: Yeah. I mean, not that I’m competent enough to write actual exploits, but I wouldn’t do it with anyone that wasn’t, like, in the U

Thomas: It’s not hard to find people that will talk to you about Accuvant or Optiv, right? It’s a big company; lots of people work there. Lots of pretty noisy people work there, or have worked there in the past. Great, good noisy people. Right. So there are journalists that I’ve talked to that are really good at this:

you know, keeping contacts in the field, talking to people, and giving good sourcing. And then there are journalists who are not as good. And I wonder why people don’t take the time to just ask around and see if they can get a conversation about, for instance, what is Accuvant, right? Or what was Accuvant in 2015?

David: Wait a minute. Thomas, are you advocating for ethics in security journalism?

Thomas: This is the second time this has happened to me. This happened to me with Eric Raymond last time, and now it’s happening to me with Gamergate. So. Thank you. I’ll shut up now.

Deirdre: On your previous question about whether anyone has analyzed the patch: this link from Objective-See. They have definitely gone deep into the specifics of the patch and the multiple other functions that were patched in it. So I’ll add a link to the show notes.

Related to Apple, we talked about Apple’s proposed client-side scanning system to try to look for child abuse imagery, known child abuse imagery. And after a lot of consternation in the public discourse, they made an announcement the other Friday, of course on a Friday, because they announced the original plan on a Friday, uh, or Thursday, I forget.

And on a Friday they said they’re going to pause on rolling it out for now. And then they had their September product announcement with a new iPhone and a new this and a new that.

David: And no new M1 MacBooks. Disappointed.

Deirdre: Yeah, I was like, am I going to have a MacBook Pro that I can buy? The answer is no, I cannot. You can get, uh, an iPad mini with a USB-C port, which is great, because I just bought an iPad and I had to pick from only two models that had a USB-C port.

And so I had to spend extra money just for that USB-C port. But yeah, at that big, you know, hoopla that they do, there was no announcement of any end-to-end encrypted iCloud, which kind of makes sense, because the way they would get away with announcing E2E-encrypted iCloud is if they had this client-side scanning in their back pocket, so law enforcement wouldn’t get mad at them. So that happened. Okay.

David: They didn’t even announce when iOS 15 is coming out.

Like we still don’t have a date for it. There’s new phones. And like, maybe not even an operating system.

Deirdre: Yeah. I’m actually concerned about that, because of not just this bug that they patched this month, but more recent ones that had to do with, you know, circumventing BlastDoor and things like that. And people were like, "Hey, are you going to make this better?" And they basically hemmed and hawed and said, "we’re going to make improvements in iOS 15, which is coming soon."

So we would really like it to come soon, but we don’t know when soon is.

David: The betas are out. I don’t know if people have taken a look at the binaries and checked to see if anything is different, but,

Deirdre: Hmm, cool. So that happened. That was fun. Please release, uh, iOS 15 for my singular iOS device, and any patches to macOS, ’cause I have several Macs.

What else? The last week was fun because Chrome also patched some zero-days. They said theirs were also known to be exploited in the wild. Two of them were an out-of-bounds write in V8 (V8 is the JavaScript engine they use in all of Chrome, Chromium, Chromebooks; my Chromecast over there is running on ChromeOS)

(so anytime you run JavaScript, that’s what V8 does; it does the running for you), and then, uh, a use-after-free in IndexedDB, which is one of the databases that you can use from your browser, from your web app. Yet more zero-day vulnerabilities that would probably not exist if not for the use of memory-unsafe languages.

So yay.

David: Yeah. And I just don’t really see V8 getting much better. Like, I don’t think it’s a total tire fire, but

like, there’s gonna be, you know, a 0-day a quarter or whatever. And as long as it’s written in C++, um, ¯\_(ツ)_/¯. While we’re on the topic of Chrome, though, can we pour one out for the Chromecast Ultra 4K?

And it’s not— like, there’s not even a 4K Chrome— is there a 4K Android TV? I don’t think there is. The one that I have doesn’t even do 4K.

Deirdre: There is this bullshit, like, Chromecast with— Google TV. It’s Google TV. It’s

David: I have one ’cause they gave it out for free to YouTube TV customers.

Deirdre: Oh, fun. I hate it, because it’s not ChromeOS or whatever, ChromecastOS. It is Android TV with some weird compat Chromecast layer that doesn’t quite work. And I literally got a, I think I got a used 4K Ultra Chromecast off of eBay without the power adapter. And it yells at me that I have the wrong power adapter. I do not like the Google TV.

And I think the only way you can buy a new one is if you go to the New Zealand, uh, Google Store and you order it from

David: So, despite complaining that it’s gone, um, for reasons, I have one that I’m not using, so I could probably send you the power adapter. This is exactly, this is the content people crave!

Deirdre: I love Chromecast. I love that it patches itself every night and I see it do it, and I love it. Yeah. So bring back the Chromecast Ultra, or 4K, or just ChromeOS 4K, swap it out with Fuchsia! ’Cause I know you’ve done that with one device. I love it. I will run it.

It’s great. I’m gonna get sidetracked on Fuchsia if I don’t stop, so I’m going to stop. Um, because we were looking at some of their, uh, Rust dependencies for crypto. We have suggestions.

David: I would just like to note that, um, I believe the term is "friend of the pod," uh, Chris Palmer has switched to working on Fuchsia from Chrome.

Deirdre: Yeah,

David: That’s certainly a negative for Chrome, but I think a positive for Fuchsia.

Um, so maybe we’ll drag him on here once he’s had some time to settle in, when he can tell us all the cool stuff.

Deirdre: So exciting. Oh, I have so many questions.

Thomas: I’ve got nothing, nowhere to go with this, but I’m just reading the, uh, the Chrome security update here and noting. So one of the vulnerabilities was a use-after-free, uh, by Marcin Towalski of Cisco Talos. So congrats to Marcin Towalski at Cisco Talos. Note: this is Cisco Talos finding good browser vulnerabilities.

A couple of different vulnerabilities from Theori, and I’d like to know more about Theori. Um, I guess they’re a Korean security research, you know, consultancy or company or whatever. Um, two different researchers there, one on the out-of-bounds memory, like, I’m seeing out-of-bounds memory write and assuming those are the really bad ones.

Right. And then there’s a couple of vulnerabilities from the, I don’t know how to pronounce it. It’s like Oulu University, O-U-L-U University, the Secure Programming Group, which is apparently a bunch of badasses, ’cause there are a couple of different researchers there, also with high-severity vulnerabilities, um, multiple in this release.

So, um, yeah, I like that people are giving credit. I like that I can kind of keep track of who’s doing good work here, and I guess pay attention to Oulu University and, as always, Cisco Talos. And I guess I need to go poke into, you know, what’s going on with Theori, because they sound pretty awesome.

Deirdre: Yeah. And then the two that were cited as having exploits in the wild were both reported by Anonymous. So thank you, Anonymous. Thank you very much for making things

David: That Anonymous?

Deirdre: No, not the Anonymous collective, blah, blah, blah. Some anonymous, uh, bug reporter.

Thank you very much. All right. Everything’s on fire. Patch your Chromes, patch all of your Apple software, please and thank you. Uh, it’ll keep you and your loved ones secure.

Thomas: My mom was forwarding "patch yer device" stuff on Facebook. So I think the cat’s out of the bag on this one; people are probably not getting that much value out of this.

Deirdre: Well

still, you know,

Thomas: I will. I will. I will echo my mom.

Deirdre: And it was going around on TikTok. Like, several people were like— when there’s a big Apple patch, I think people are fucking getting it. It’s got that collective knowledge at this point, which is both good and bad, I guess.

David: Deirdre, do you get to feel better than everyone now because you’re on a Pixel phone? For the longest time, people have been saying iPhone, iPhone, iPhone, iPhone. And you can maybe make the case now that Pixel is a more secure operating system. I guess the downside is that your phone doesn’t work because it’s a Pixel.

Oh. But like, uh,

Deirdre: what

David: Maybe there are fewer remote 0-days.

Deirdre: For a very long time, I felt mildly superior because there were all sorts of features that I could use on my Android phone that you just were not allowed to use on iOS. And since I just got this iPad, I’m like, why can’t I do blah? And it’s like, you just are not allowed to do that. And I’m frustrated by that.

But now I get the bonus of being like, "oh, I can do all this stuff, and it’s more secure." And I have slightly more choice about what fucking browser runs under the hood. I can have more than one. Wow. Like, amazing. I can have something other than WebKit running under the hood.

I have fucking six browsers running on this phone. It’s great. And not all of them are based on Chromium. I

David: That I

Thomas: You’re really, you’re really selling me.

David: I, um,

Deirdre: so slightly. Yes.

Thomas: We’re both wondering if you, if you need some kind of help,

Deirdre: Because I have so many browsers,

Thomas: I feel like lots of different browsers is not a thing that was missing from my life.

Deirdre: I spent a long time as primarily a web application developer, and so I still have Opinions and a collection of browsers. And I would

Thomas: Is one of them Opera?

Deirdre: No, no longer. Once upon a time, yes.

David: Is Opera Chrome?

Deirdre: It switched over to Chromium, I think. Yeah. It used to be that you needed an IE derivative, you needed your Chrome derivative, you needed a WebKit derivative, and you needed Mozilla, and you needed, you know, Opera, because they were all different lineages.

And now almost all of them, including Edge, Opera, and, you know, Brave and Chrome, are the same lineage. They’re all Chromium-based. And then Mozilla. So you only have the three now: WebKit-based, Chrome-based, and Firefox. And that’s basically it. It’s kind of good, and also kind of "meh."

Thomas: Opera mostly exists today to be a fraud signal. I wish I was kidding.

Hey, Paul, to view, um, we should have some secure messenger.

I think we can have some opinions about, well, we could start with Matrix?

Deirdre: Okay.

Thomas: I’m having trouble fathoming what happened there.

David: Yeah, so I haven’t dug into the protocol, but the description of the bug is enough to make me think, what the fuck is going on with this protocol?

Deirdre: Well, I don’t know if it’s the protocol because it seemed to be a vulnerability in their SDK, so.

David: Yes, but the bug should not have been writable in a situation where the protocol makes any sense. As far as I can tell, there’s a feature in Matrix that lets you export a key from one of your devices to another one of your devices so that you can get message history, which, you could debate whether or not that’s a good feature.

I think it’s fine. But think about how this should work: you have a known set of devices, and you need to get an authenticated message from one of those devices that says "send the key" to that device. And somehow there was a bug in Matrix where you could take a message from an old device that you had removed,

so, like, you had some device, you removed it, and somehow an attacker could spoof a message from the old device and get you to send them their message keys. And I just don’t understand how the first step of the protocol isn’t "who was the sender of this message?" And then, "what is the key for the sender of this message?"

And then, "does it work under that key?" Like, I understand, you know, inner-versus-outer bugs, where the person you authenticated as isn’t the person that’s trying to do some action, and things like that. But I just don’t understand how you build a protocol where the "share keys" message has the opportunity to do that. Like, you know who

the

Thomas: See, you see, it’s not a big deal, because exploiting this vulnerability to read encrypted messages requires gaining control over the recipient’s account. Ergo, it requires either compromising their credentials or compromising their home server. You have to compromise the home server to actually exploit this vulnerability, David.

David: Isn’t that the exact threat model that it’s supposed to defend against? The home server being malicious?

✨I, I don’t think you understand, you have to compromise the server to do this.✨

Deirdre: It’s supposed to— that’s the whole point of the end-to-end encryption.

It’s supposed to fail closed, not fail "give me all your keys."

Thomas: I think Matrix is kind of a cool project. They’re trying to do encrypted IRC with roughly the ergonomics and the federation model of IRC. So there’s a notion of— unlike Signal, where Signal runs the servers for you. Look, you could theoretically build your own Signal server, but, like,

Deirdre: I think their protocol, which they call Olm, is basically a follow-on to the Signal protocol, with some other stuff on it that supports this kind of federation thing. Yeah.

David: I like the idea of it as well. It’s just, I don’t know what’s going on with it. I don’t know anyone that works on it, which, like, I should know somebody that works on it. It’s a little pretentious, but at the same time, if you said such-and-such is working on Matrix, I probably should have at least heard their name at some point.

That’s kind of how I evaluate TLS libraries. Uh, and I just don’t know. So I like the idea, though, ’cause I’m just really frustrated with Slack, and I wouldn’t want to use Discord at a company, but

Deirdre: I think someone from Matrix is collaborating on the MLS spec with, uh, other people, which is basically trying to do end-to-end encrypted, Slack-style group messaging. Think Signal groups, but actually scalable to hundreds or thousands of people in your group messenger.

And it’s using these, you know, trees, yet again, you know, Merkle trees, to manage the state of all these groups of keys and people who add and join, and they get ratcheted and everyone gets, you know, rotated, and all that sort of stuff. I think someone from Matrix is collaborating on that, but that protocol, MLS, is not Olm or Megolm, and I’m saying O-L-M.

The whole, you know, being able to share keys would give you the willies to have implemented at all, even if you had a good reason for it, or you think you have a good reason for it. But yeah.
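Why trees help with large groups: in MLS (since published as RFC 9420), members sit at the leaves of a binary "ratchet tree", and a member who updates their keys re-derives secrets only along their direct path to the root, so in a fully populated tree the work grows with log2 of the group size rather than with the group size. The sketch below computes that path using the array indexing from the spec’s appendix (leaves at even indices, a node’s level equal to its count of trailing 1 bits), assuming a full tree whose leaf count is a power of two. It only computes node indices; there is no cryptography here, and the function names are illustrative.

```go
package main

import "fmt"

// level returns a node's height in the array-indexed tree: leaves (even
// indices) are level 0; an interior node's level is its number of trailing 1 bits.
func level(x uint32) uint32 {
	var k uint32
	for (x>>k)&1 == 1 {
		k++
	}
	return k
}

// root is the root index of a full tree with nLeaves leaves (a power of two).
func root(nLeaves uint32) uint32 { return nLeaves - 1 }

// parent returns the index of x's parent (x must not be the root).
func parent(x uint32) uint32 {
	k := level(x)
	b := (x >> (k + 1)) & 1
	return (x | (1 << k)) ^ (b << (k + 1))
}

// directPath lists the nodes from a leaf's parent up to the root: the nodes
// whose secrets change when that member updates. Invalid input returns nil.
func directPath(leaf, nLeaves uint32) []uint32 {
	if nLeaves == 0 || nLeaves&(nLeaves-1) != 0 || leaf >= nLeaves {
		return nil
	}
	x, r := 2*leaf, root(nLeaves)
	var path []uint32
	for x != r {
		x = parent(x)
		path = append(path, x)
	}
	return path
}

func main() {
	fmt.Println("leaf 5 of 8:", directPath(5, 8))
	for _, n := range []uint32{8, 1024, 65536} {
		fmt.Printf("%d members: %d path nodes to update\n", n, len(directPath(0, n)))
	}
}
```

Real MLS trees can have blank nodes and unmerged leaves, so actual update costs vary; the full-tree case just shows where the logarithmic scaling comes from.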

David: MLS is very cool. I mean, I haven’t had to implement a secure messaging thing, so I can’t say with certainty that it’s well done, because I don’t know what would come up when you actually try to implement it, but it looks very well done.

Deirdre: They’re nailing down all the shitty cases of a large-group, end-to-end, ratcheted messenger. There’s a nice implementation of the protocol in Rust. They’ve been working on the spec; I see updates every week, if not every day. It has to go through the IETF process, which I think we all have opinions on, um, but that’s the way they’re doing it, and they’re doing it.

David: Yeah, I think the main downside is just that no one uses it in a product at the moment.

Deirdre: Yeah. Uh,

David: it, but like, there’s not like the MLS messenger

Thomas: On the upside, it’ll have a remote debugging feature with heartbeat messages soon.

David: Oh man. Uh, Richard Barnes has been driving, or at least he was driving, a lot of the management of that list. And Richard Barnes is not the type of person to say yes lightly. Um, I was at RWC a couple of years back, when it was in San Jose, I want to say 2019 maybe, and some blockchain person came up to me, and I happened to be talking to Richard and, um, Eric Rescorla, author of the TLS RFC.

And they started talking about blockchain stuff, and I opted out of the conversation. But you could not imagine a worse fate for this blockchain person, who perhaps did not know a lot about the actual underlying cryptography, than getting stuck with Eric and Richard, um, and getting grilled like he’s on an IETF mailing list.

But when I left, there were not many people left at the happy hour, and somehow they were still talking in a corner, and I’m just like, this poor soul.

Deirdre: Like, you’re either having a great time or the worst time. Um, to correct myself: it’s not Matrix. It is people from Wire, the Wire messenger, who are collaborating on MLS. Um, and I just threw a link in our show notes on MLS. MLS is cool. Richard Barnes is now at Cisco. If this gets deployed into some sort of Cisco group messenger, that’s pretty cool.

You know, and then maybe other people will absorb that into Slack. I don’t know, man. Slack is an Electron-based app, a web-browser-based app, and it’s already janky. And so I don’t even want to think about adding, uh, you know, Messaging Layer Security to Slack as it exists, because the web app without any of that is already giving me grief.

David: That’s just not going to happen. That’s not a feature anyone that pays for Slack wants.

Deirdre: Well, people pay for Wire deployments. I don’t know if there’s a thing that Amazon has made that’s basically recycling Wire or something like it, because they want secure comms, but they don’t want to use Slack. They want it encrypted.

Thomas: There’s, like, Chime.

David: Chime. Chime is just self-hosted. I don’t think it’s

Thomas: yeah, it’s secure in the sense that you control the servers. Right. But it’s not secure in the sense of like

David: And you can deploy Chime on your AWS account, which is ver— because you don’t want Amazon to run it. You want Amazon to run it as a service in your Amazon services account.

Deirdre: steps

David: Yeah.

Deirdre: You want Amazon to run it with extra steps.

Sorry about the bug, Matrix. Thanks for fixing it.

David: And good disclosure, I think.

Deirdre: Yeah. Yeah. Everything that I saw seemed reasonable.

Thomas: We had some follow-up to the JWT conversation we had with Jonathan Rudenberg. I gather there’s a PASETO update. So after we did the JWT conversation with Jonathan, I wrote a long blog post, which basically just plagiarized the conversation that we had, which is a thing I do. Just be aware that that’s gonna keep happening. And I kind of did a breakdown of the different token formats, and I gave some props to, you know, kind of the protocol token thing that you guys had been talking about. I doubt anyone’s going to use it, but still. I was mildly critical; I’m more than mildly critical of the overall concept, not PASETO itself. I think PASETO is a fine realization of these JavaScript tokens, but I don’t love JavaScript tokens. I kind of didn’t see a great place where it fit into the ecosystem of different token stuff. And I pointed out Neil Madden in the CFRG. Again, my advice to the PASETO people is: don’t engage the IETF with your custom token format thing.

No good will come of it. But I guess some good did come of it, in that Neil Madden pointed out that they had the HMAC-versus-public-key confusion vulnerability, the same one that JWT had. And, uh, I guess Paragon has responded by trying to mitigate that. What do we think of the mitigation?

Deirdre: It looks nice. Well, there were two: the confusion, um, and the changes that came include, uh, that data,

Thomas: It’s interesting, ’cause they updated their documentation in two places. I guess if they had had those documentation updates beforehand, it probably wouldn’t have changed what I wrote. Right. They’d already kind of documented that the potential for that vulnerability existed, and my qualm would have been that the potential for that vulnerability should not exist.

But the interesting third thing they did is to have negative test cases now, so that their test suite explicitly tests for "do you have this HMAC-versus-public-key type confusion vulnerability?", which is cool. I don’t know how I feel about using test suites to mitigate vulnerabilities that don’t necessarily have to be there by design, but now are.

David: So I’m not sure. There’s definitely the HMAC/RSA confusion, and we talked about that in previous episodes, and you can Google it and learn how it works. It’s very straightforward. I don’t know that there’s, even if you tried, an AEAD and Ed25519 type confusion vulnerability. Or even AEAD and HMAC. Maybe you need one side to be HMAC.

That’s probably what it is, and that would make sense. And so if the two options are, you know, a modern signature and an AEAD, you can’t really screw up your header, because the thing you do is take the header and put it in as the associated data in the AEAD. So if someone has swapped out the header, it no longer, uh, works correctly. So I guess the thing I would say is that deprecating the old versions spoke to me more than the negative test cases, although I do like them, because I just don’t think the type confusion really exists if you’re using an AEAD correctly. So, uh, props to both PASETO and to AEADs, which are great.
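A minimal sketch of David’s point about headers and associated data, using AES-256-GCM from Go’s standard library. The `demo.local.` / `demo.public.` headers and the `sealToken`/`openToken` helpers are made up for illustration; real PASETO versions use their own constructions and a pre-authentication encoding of the header, so this is not PASETO’s actual format. Because the header is authenticated as associated data, presenting the same ciphertext under a different header makes `Open` fail.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"errors"
	"fmt"
)

func newAEAD(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key) // a 32-byte key selects AES-256
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// sealToken encrypts payload and authenticates header as associated data.
// Output layout: nonce || ciphertext+tag. The header itself is not encrypted.
func sealToken(key, header, payload []byte) ([]byte, error) {
	aead, err := newAEAD(key)
	if err != nil {
		return nil, err
	}
	nonce := make([]byte, aead.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return nil, err
	}
	return aead.Seal(nonce, nonce, payload, header), nil
}

// openToken fails unless header is byte-for-byte the one used when sealing.
func openToken(key, header, token []byte) ([]byte, error) {
	aead, err := newAEAD(key)
	if err != nil {
		return nil, err
	}
	ns := aead.NonceSize()
	if len(token) < ns {
		return nil, errors.New("token too short")
	}
	return aead.Open(nil, token[:ns], token[ns:], header)
}

func main() {
	key := make([]byte, 32)
	if _, err := rand.Read(key); err != nil {
		panic(err)
	}
	tok, err := sealToken(key, []byte("demo.local."), []byte(`{"sub":"alice"}`))
	if err != nil {
		panic(err)
	}
	if pt, err := openToken(key, []byte("demo.local."), tok); err == nil {
		fmt.Println("original header:", string(pt))
	}
	if _, err := openToken(key, []byte("demo.public."), tok); err != nil {
		fmt.Println("swapped header:", err)
	}
}
```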

Thomas: Yep. Um, I’m there with you guys. It’s definitely a positive step. It was already a credible thing to use; if PASETO was your thing, go with my blessing. Right. Um, but yeah, good response. Happy to see it.

Deirdre: Yeah. Thank you to the authors behind PASETO. I’m always in favor of negative test vectors, even if it’s not something like a JWT or a PASETO or whatever. It’s not sufficient to just have golden-path test cases, like "this should work."

You should have a thing, especially for compatibility. If you’re trying to do anything that’s like an interoperable implementation of a protocol, or, you know, a verification scheme or something like that: not only should these things pass, these things should fail. And if they don’t fail, you are not compatible, or you’re insecure, or something like that.

So I am always happy to see more negative tests.

David: And you should be doing that when you are using JWTs, PASETOs, whatever, in some application. Your application should have a test somewhere that makes sure that the invalid ones or the missing ones don’t work,

Deirdre: yeah.

David: regardless of which library you’re using or which token format.

Deirdre: Yeah.

Okay. So, related to, I don’t know, Go cryptography and TLS and one of our favorite people, Filippo, who was on our second episode: Go TLS, the TLS implementation in the Go standard library, has made some changes to how it picks its default cipher suites when negotiating TLS. They’re all great choices. I agree with everything that the Go team did, and not just because I know Filippo. These are basically ways of making a bunch of programmatic choices about what key agreement you choose, what block cipher mode you use, what HMAC or hash function you use, depending on the other parameters of the client and the server negotiating this TLS session. For TLS 1.2 and below, you’ve got a bunch of things you have to negotiate. And part of the great thing about TLS 1.3 is that you don’t have as much stuff to negotiate, because it’s just kind of picked by TLS 1.3: you have a much smaller set, and they’re all good choices. For TLS 1.2 and below, they’re not all great choices.

So what Go basically did is automate that decision tree, based on the parameters of the session, to pick the good stuff.

Thomas: One way to put it. That’s one way to put it.

Deirdre: One more point is that they basically encoded what the Mozilla, uh, SSL config web app has been doing and providing for a very long time, which is: give me information about your clients and your server and the things you need to support for your TLS, and we will spit out a config for, like, Apache or whatever it is, with the right top-to-bottom first priority, second priority, third priority of TLS config, to get the best security for your, uh, performance and device choices. And basically Go took that same sort of rubric and encoded it into their Go cipher suites.

I think this is good.

Thomas: Uh, yeah, a good rule of thumb with Go cryptography is that whatever Filippo says you should do is what you should do. It’s pretty straightforward. Right? Um, I can tell you how the internet sees this, um,

Deirdre: I just spit

Thomas: That they took our freedoms! Precious freedoms! There’s a whole thread, like, "this breaks the Go compatibility promise."

I’ve only got one thing to say about this, which is, um, at least a couple of different people complained that— so, Go is removing some of your flexibility in controlling cipher suites in Go TLS, which is a good thing. Right. ’Cause they’re right, and they’re thinking about this more than you’re thinking about this.

Right. But there are people that build test tools, using the go standard library, that need precise control over what ciphers and stuff they’re using. Cause that’s one of the things that testing, right.

David: That includes me. I’ve done this.

Thomas: I just want to put a word in for the right way to do this.

Somebody said, "Well, what do you expect them to do? Fork the TLS library in the standard library?" And it’s like, yes, that’s exactly what this is. This is what everyone does. So if you’re complaining about this change in Go and you haven’t done this yet, the next thing you should do is vendor out the TLS library, fork it, and control it yourself.

Everyone who’s ever written a serious TLS testing tool in Go was already doing this, even before any of these changes happened, because the Go TLS library is such a great library. It’s very easy to change, very easy to muck with and add instrumentation to, it compiles quickly, there aren’t dependencies, and it just works.

It’s a great basis for building tools. But the thing that makes it great is that you can just forklift it out of the standard library and make whatever changes you want. So I just want to put a word in: if you’re complaining about this, you’re missing the point of this library.

David: Yeah. And I’ll take the opportunity to plug the zcrypto library, under the zmap org on GitHub, which is exactly that. I don’t think it has TLS 1.3 at the moment, which is a little embarrassing. I haven’t worked on it in a while, but I think someone is actively working on that.

But if you need some export ciphers, or you need some RC2, credit to Thomas for his implementation of RC2,

Thomas: Silence.

David: Hey, you got a bottle of gin in exchange for licensing an RC2 implementation so that some lawyer would shut up.

Thomas: I hate all of you so much.

David: I had to check a bag when I flew to Vegas to bring you that bottle of gin.

Thomas: I love gin and I appreciate you, but I also hate you till the ends of the earth.

Deirdre: Did he make you... well, no, you already wrote it, and you had to

Thomas: All I did was write RC2 and post it on GitHub, and then all this horrible stuff happened because people used it.

Deirdre: ✨That’s what you get✨

"Look, here’s some random crypto code on a page. Let’s use it."

I mean, that’s how the internet goes, right?

David: Hey, that library is why we were able to measure the DROWN attack, which, if I recall, is one of your favorite things on the internet. Maybe you can explain to me how it works one day.

Thomas: It is my favorite TLS attack, bar none. Right? I think it’s also the reason why I have the Arctic Code Vault icon on my GitHub profile.

David: Oh yeah. Oh, that’s funny. It’s going to be preserved for all time.

It’s for my legacy.

Deirdre: I think one of the last things we have for today: there were cloud vulnerabilities in Azure with cross-tenant exposure on their instances. Kubernetes cross-tenant, Cosmos DB cross-tenant, and remote code execution in VMs by removing an authorization header.

Thomas: Did you see the vulnerability?

Deirdre: No, please do.

Thomas: Oh, it’s wonderful. I don’t have all the details; I didn’t take the time. My wife, Erin, spent a lot more time looking at this than I did, and hat tip to her for tipping me off about it. But I guess on Azure, all these systems run a series of agents, kind of the same way.

I’m trying to remember the thing in EC2 where you can run tasks instead of just jobs, which also involves an agent. But anyway, they run agents on all of these machines, because agents are always great, and I’ve been saying that forever. And one of them is authenticated.

There’s an authorization header. HTTP is the protocol it speaks, and you send an authorization header with, I think, a token in it, right? And the vulnerability is, wait for it: you can just not send the authorization header, and it fails open.

Deirdre: Oh no.

Thomas: I just want to say this. Back in 2005, my friend Jeremy Rauch had me work alongside him on a web application pen test.

I’d been a vulnerability researcher forever, but I hadn’t been a consultant before. This was my first billable consulting project. And I’d never really done web stuff either; it was the first web assessment I’d ever done. Before that it had all

David: Did the internet even exist in 2005?

Thomas: Barely. But I remember I looked up what the web vulnerabilities were, and I looked up SQL injection, and in the login field and the password field I typed quote, or, quote quote, equals, quote: ' OR ''='. And it worked. I logged in with a SQL injection on the password field, because it was looking passwords up in the database or something, whatever.

And from that point on, every time I was on an assessment, for a long time, I believed it was possible that if I just put ' OR ''=' into the password field, I was going to be able to log in, because that’s a vulnerability that exists. Of course, this is not a vulnerability that exists.

It’s a heartbreaking vulnerability of staggering beauty. You’re never going to see it again in your whole lifetime. And I feel like the Azure agent vulnerability is another example of that: you’re never going to see a vulnerability as great as remote code execution from just leaving the authorization header out of the HTTP request.

You’ll try it. Those people are forever cursed to try that vulnerability over and over again and never see it again.
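A hypothetical Go sketch of why that payload works: when the query is built by pasting input into a string, ' OR ''=' turns the password check into a condition that’s true for every row in most SQL databases, because AND binds tighter than OR. The users table and its plaintext password column are made up for the example (storing plaintext passwords is its own bug). The parameterized version uses the real database/sql API, but the ? placeholder style depends on your driver.

```go
package main

import (
	"context"
	"database/sql"
	"fmt"
)

// naiveQuery builds SQL by pasting user input into the string: the
// vulnerable pattern. The schema is hypothetical.
func naiveQuery(user, pass string) string {
	return fmt.Sprintf("SELECT id FROM users WHERE username = '%s' AND password = '%s'", user, pass)
}

// lookupUser is the parameterized alternative: the driver sends values
// separately from the SQL text, so quotes in the input stay data.
// PostgreSQL drivers use $1, $2 instead of ?.
func lookupUser(ctx context.Context, db *sql.DB, user, pass string) (int64, error) {
	var id int64
	err := db.QueryRowContext(ctx,
		"SELECT id FROM users WHERE username = ? AND password = ?", user, pass).Scan(&id)
	return id, err
}

func main() {
	// The WHERE clause becomes (username = 'admin' AND password = '') OR ''='',
	// which is true for every row in most databases.
	fmt.Println(naiveQuery("admin", `' OR ''='`))
	// SELECT id FROM users WHERE username = 'admin' AND password = '' OR ''=''
}
```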

Deirdre: You just think, maybe I might see it one more day. God, Azure was never going to be my top recommended cloud, if you need to use someone else’s computer to do your computing.

Thomas: That would be Fly.io.

David: Can you run an Active Directory stack for me, Thomas?

Thomas: I could try.

David: Because what I really want is a globally distributed Active Directory.

Deirdre: Jesus Christ.

David: Excuse me, Active...

Deirdre: Thomas is going to go into the code mines, and tomorrow it’ll be like, okay, where do you want it?

Thomas: No, I’m just going to post on Slack, and Jason Donenfeld will write it for me in two hours.

Deirdre: My God.

Thomas: That’s worked for me like three times now.

Deirdre: Jesus Christ. Great power. Uh, I guess, you know, this sucks. I really don’t like to see this from your cloud, which you’re trusting to spin up these things on its hypervisors and not fail open.

The only thing I would really have to say about this is that the Signal service requires SGX to underpin several of its recently deployed services, including private contact discovery and some of its group key management and recovery stuff. Those are underpinned by doing secure compute in SGX. And as far as I know, Azure is one of the few clouds that actually lets you do anything with SGX at any kind of scale.

I think the other one might be IBM. So it feels like, one, SGX is a leaky piece of crap, and that’s not even getting to the side channels. There seems to be yet another really scary break or leak vulnerability in SGX almost every year at Real World Crypto or other places, maybe Black Hat, I forget, and that’s before you even get to the side channels around processing secret keys in the secure environment and leaking them to someone else.

So when one of the only clouds that lets you use SGX is also just failing open, that feels bad. It feels bad that Signal, supposedly the most secure private messenger, relies on this sort of infrastructure. So, I don’t know, mitigate that? I don’t know how you mitigate that if you’re already deployed on it.

So that’s what I have to say about that. Anything else?

Thomas: I feel like we’re at our best at the ends of these podcasts, when we’re all a little bit punchy. So a pro tip from now on might be to just skip to the last 30 minutes of these things.

Deirdre: Well, you have to get through the first part to get to the end where we’re all punchy. The journey is part of getting there. I don’t know.

David: Do any of you know anybody who’s used IBM Cloud?

Deirdre: Not really. Okay, only because they had a quantum-computer-as-a-service thing, and I’m not joking. They have a thing where you give them something targeting their intermediate representation, and then they’ll run it on their quantum computer or whatever.

Like Rigetti, they have an actual quantum computer. They have this whole simulation environment where you write software that targets a quantum circuit, and then, in theory, it would eventually also get run on a real quantum computer with qubits and all that crap. That’s the only thing I’m aware of.

Thomas: It’s a feature. I’m sure we’ll launch it at Fly soon too. My partners at Fly were at a company called Compose.io that did managed Postgres and got bought by IBM; that was the company they did just before Fly. So I do know people who have been involved in IBM Cloud stuff, and if we have IBM Cloud questions, I can bounce them off of Kurt and get them answered.

So maybe we can ask him about Signal and

Deirdre: Yeah. My only question would be: what is the SGX story for deploying things that need to work at global cloud scale and distribute this sort of thing? It’s sort of like Raft with secure computation in SGX. They have a couple of blog posts about it, but I’m very curious whether there are any better stories about managing that, because it doesn’t sound fun.

It sounds like a lot of work. So if they can do it any better, maybe that’s something that they can sell to someone like Signal.

Thomas: That feels like a lot of news.

Deirdre: Yeah. It’s been a little busy.

Thomas: I have many fewer browser tabs open now, so thank you for that.

Deirdre: Yeah. Everyone patch your Apple software, your Chrome, your Matrix, and your Element, and, you know, cycle your VMs on Azure or something, if they haven’t been force-rebooted already.

Thomas: Run your PASETO test cases.

Deirdre: Yes. If you maintain an interoperable implementation of PASETO tokens, make sure you integrate those negative test cases into your builds.

David: And keep an eye out: restart your Fly clients so that you get the upcoming Active Directory feature.