We’re back! With an episode on lattice-based cryptography, with Professor Chris Peikert of the University of Michigan, David’s alma mater. When we recorded this, Michigan football had just beaten Ohio for the first time in a bajillion years, so you get a nerdy coda on college football this time!

Lattices had some false starts at the beginning too; not as many false starts as Ohio state had last weekend, they didn’t have Hutchinson staring down in their face

This rough transcript has not been edited and may have errors.

Deirdre: Hello, welcome to Security Cryptography Whatever. I am Deirdre. We also have our co-host. David, how are you doing David?

David: I’m doing great.

Deirdre: Cool. We also have our special guest, professor Chris Peikert from the University of Michigan. Wait, is it University of Michigan, Michigan University. I don’t know how to do this.

David: It’s the University of Michigan.

Deirdre: Okay, good. I got the first time.

Hi, Chris. Welcome.

Peikert: you. Thanks for inviting me.

Deirdre: Yeah

Peikert: first podcast, I have to say. So if it sucks, you know, who to blame very first. Yeah.

Deirdre: Well, cool. We are we’re blessed or whatever. very excited to have Chris Peikert on the podcast today. He is one of my leading voices on lattice based cryptography and. Uh, I am an, isogeny-based cryptography fan and isogenist, but I cannot deny the power, the performance, the capability that lattices give to a lot of cryptographic protocols nowadays.

So I can’t help, but just admit where, uh, my favorites, isogenies just, can’t really quite do some of the things that lattices can do with the speed and the, you know, you can’t do fully homomorphic encryption with isogenies as we know of right now. So, uh, have to hand it to Chris and all of his research and his collaborators on helping push forward the lattices.

I have one thing off the top of my head because I’m mentioning isogenies, you had a pretty cool paper, "He gives C-Sieves on the CSIDH" that was using lattices in that Kuperberg-based attack against isogeny construction?

Peikert: There, there are lattices in a couple of ways in that, in that paper that you referenced. yeah, so they show up, even in, isogenies they show up kind of everywhere. You can’t avoid it. Um, you know, in cryptography they’ve showed up, uh, I don’t know, in the early nineties and late eighties, they were showing up in attacks all the time.

And that’s, that’s kind of how they show up in, in this, uh, the work that you mentioned as well, but, uh, they can be as constructively to

Deirdre: oh, yeah.

Um,

David: show up in factoring RSA, um, as well, if I’m not

Peikert: yeah, like RSA with a small decryption exponent, you know, it can be attacked using lattices. And we can talk about that a little bit more and yeah, so they’re, they’re very versatile.

Deirdre: For that, uh, "C-Sieve on the CSIDH" paper. I just remember it being B also, it’s a good attack. Being yet another knock against these class group constructions out of, isogenies and it seems like I am showing my bias here, but I think the, isogeny graph supersingular isogeny graph-based constructions, I guess, SIDH and SIKE seem to be ke—

people keep trying to cryptanalyze them and they keep getting stronger? Almost? They keep being— the original parameters seem to be a bit, a bit aggressive so we can bring them down a little bit. Whereas with the class group stuff, like you gotta keep bumping up those parameter sets. That’s because of, in part attacks like this paper. Do you think there’s— they’re so attractive, because you can slot them into like commutative uh, protocols, like a regular Diffie-Hellman or, uh, or anything like that, that requires commutativity that the SIDH/SIKE stuff doesn’t really give you, but it just seems like they keep getting weaker. Do you think there’s a hope there, or do you think the structure of these things is just a little bit too much for a quantum

adversary?

Peikert: The that’s a good question. I mean, the, the "C Sieves on the CSIDH" uh, you know, the title fkind of picks on CSIDH but it actually, the attack applies to any of these commutative uh, group action schemes, which, you know, go back to, uh, , you know, Couvegnies unpublished work in the late nineties and CRS after that.

so it, it, it’s sort of very generic and, uh, it’s just kind of using these Kuperberg-style algorithms. what was interesting or novel, or I guess hadn’t really fully, fully been explored before, in this work was that, some of these Kuperberg algorithms allow you to do a trade-off between how much memory you use and how many kind of quantum calls to the group action that you used and, uh, memory, you know, classical memory,

Deirdre: by the So

Peikert: the, the cool thing there was that you don’t need qubits, you just need classical bits that you can then access, kind of in superposition. So this is, uh, a model that has been used in a lot of the quantum computing literature. and you know, that kind of memory is, is a lot cheaper than, uh, you know, general purpose, quantum computation, qubits and things like that.

So, uh, what we showed in that paper was basically by kind of cranking up the classical memory, a little bit higher, you can really lower the amount of quantum computation you need.

and then it’s sort of just a generic trade-off, but we gave some examples like, oh, you can just do like 2^14 group actions Uh, with maybe a terabit of memory, something like to look into the tables, but terabit of memory and, you know, 16,000 group actions, quantumly lets you break this thing. So, so that was very much attuned, you know, very much requiring the commutative structure of, of the group action.

And uh, yeah, like you said, like SIKE and SIDH they don’t have that commutative structure. So there’s really nothing to be said, that we know of at least right now, you know, using these, uh, Kuperberg-type sitting algorithms against them, at least not yet.

Deirdre: Awesome. Thank you.

David: so for benefit of the listener and definitely not myself, who certainly knows what all of these things are. Could we maybe

define what a lattices is well as the difference explain the difference between the commutative CSIDH stuff, the regular non-community isogenies and then lattice uh

Peikert: Right. So this is uh the commutative property, is sort of like this. You have a group action. It’s just this fancy word for, uh, an operation that takes, a kind of a scalar value and a point, you know, some other kind of value and it gives you value that’s in– of the same kind as the second argument.

And so what you can do is kind of the usual, like Diffie-Hellman style thing where, everybody agrees on some base points. And, Alice chooses her own secret scalar and Bob chooses his own secret scalar and they apply, you know, the scalar to the base point and they trade it off, send it, send it to the other one, and then they can apply their own secret value to the public value that they got from, from their counterparty and voila.

You have this like Diffie-Hellman type thing. The secret key is now A— ABbasepoint. And so it’s like very much in the style of, of classical Diffie-Hellman but with these different kind of structures, you know, not, not cyclic groups, but rather, these weird, isogeny things.

So, you know, that’s both the attraction and then kind of a little bit of the downfall, because this, this commutative structure, the fact that AB times base point equals BA times base point, that’s the commutativity is what allows you to use these like very cool quantum, Kuperberg style algorithms and, you know, you’ll have to read the paper for that, because to be honest, I forget all the details.

been a couple of years, but, um, the, the basic idea is that you can run these cool quantum sieving algorithms, which allow you to, get the secret key from the public key and a, in a very cool way. And, uh, the commutativity is, is critical to making those algorithms work. so, you know, SIDH, and, and SIKE they don’t, they don’t work that way.

They don’t have that commutativity. They don’t have a group action in the, in the same kind of. way. the way that you encrypt or the way that you do a key exchange is sort of just fundamentally different in a way that I don’t actually understand all that. Well, uh, you Deirdre you’re you’re way, way ahead of me on that one.

but you know, th that lack of commutativity is preventing these like subexponential attacks that, Kuperberg quantum attacks that, that Kuperberg gave.

Deirdre: Yeah. And there’s like a hack. Well, the original… before it was SIDH it was like CRS. There was, you know, something very similar and, but the, it was kind of vulnerable to the, to this sort of thing. And then they moved to supersingular curves and they had a hard time like finishing the diamond of the like key agreement.

and the hack is literally to put these quote unquote auxiliary points, on your public. key And it’s just enough information for you to do this like, you know, commutative math, but it’s, isogeny math, to get to the shared secret, or at least the shared j-invariant and people have been worried that those auxiliary points was enough extra information to leverage for some sort of attack like this to, to give you just enough commutativity to do something like this.

And it’s been over 10 years and it’s not quite, there’s been a couple of little attacks, but nothing really, uh, showstopping. but yeah, it’s a little bit of a hack to give you just enough commutativity to, to come to a key agreement with SIDH and SIKE but it’s not, it’s not enough to do real composition the way you do with like, groups and group actions, in classical or, you know, uh, these group actions.

which is annoying. If you’re trying to recreate some of this classical crypto protocols in a post quantum setting, you can’t quite do all of them with, what SIKE and what SIDH like. You can’t do Schnorr signatures like with them. but yeah, it doesn’t have the same amount as the, these group actions stuff.

Lattices though, are different beast.

Peikert: different. Yeah. There’s a, I mean, where to begin on this? I have some pretty pictures. I mean, I don’t know if your

Deirdre: listenership

Peikert: uh you have a listenership of tens? If not, if not scores, right.

Deirdre: Dozens!

Peikert: All right. dozens of dozens, as, as in pictures I could put up and just kind of, you know, anybody who’s listening only, I, I pity you and, uh, go to the video stream because you’re going to have to look at things, but I can kind of talk it through if, if that would

help.

David: can toss the links or the names of the um, or the slide numbers

Deirdre: in the show

David: the show

notes

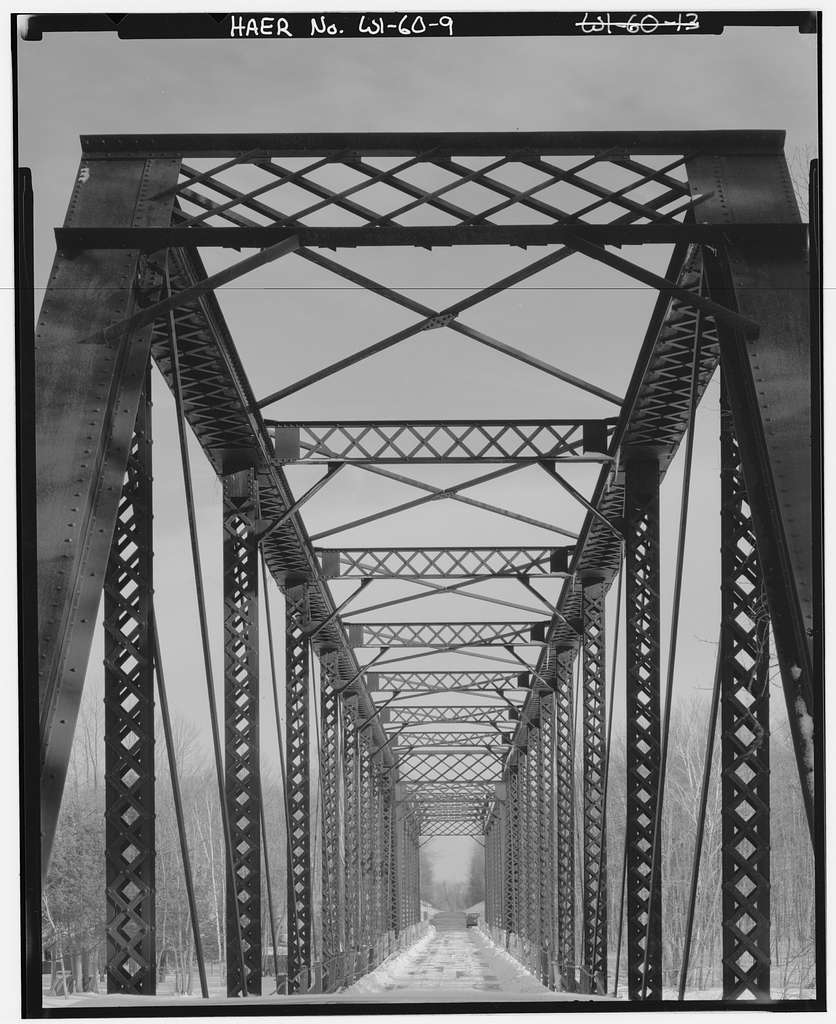

Peikert: Beautiful. All right. So yeah, this is kind of like, some pictures of, of lattices. I mean, you don’t have to read the formal definition. Like there’s a math-y definition at the top, top few lines. but really it’s enough to kind of just look at the bottom, the bottom here, bottom half and this middle, middle third, like what are lattices.

Right? So the integers just zero one, negative one to negative two, et cetera. That’s the simplest most basic lattice. So, you know, actually we’re teaching lattices to, you know, pre-kindergarteners and stuff. although maybe not negative numbers, but anyway, So more generally they are in multiple dimensions.

Here, you know, here’s a lattice in two dimensions and this is just the two dimensional integers. So this is a nice square lattice where we over the origin here, in the center. And then if you go to the right, you have to 0.10. And if you go up, you have the 0.1, one, and over to the

David: basically

a dot grid. Is that what I’m I’m

seeing?

Peikert: And, and, and this is like a perfectly square lattice, right? It’s like just a tiling of squares.

Deirdre: on

and it goes on

for

Peikert: forever. Exactly. So in, in all the, you know, all directions in these two dimensions, and if you’re, you know, a little more imaginative, you can think of the three-dimensional integer, lattice, where you know, it’s all the points, XYZ where X, Y, and Z are integers, maybe be any integers. So that’s, you know, that’s the three-dimensional lattice. And then, I can’t, I’m not very good at even envisioning three dimensions, but you go to the higher dimensions and that’s where things really start to get interesting.

That’s where we, we use them in cryptography. So like, this is a pretty uninteresting lattice, but like here’s a more interesting one. This is called a checkerboard, lattice or the chess board lattice, maybe you can figure out why any guesses?

Deirdre: that you’re yeah, your different chess pieces move

Peikert: Yeah. These green points would be like the white squares or the centers of white squares on the, on the checkerboard or chess board. And then these missing points here would be the centers of black squares. So you kind of have this alternating, you know, white, black, and then shifted when you, so when you go up, it’s shifted over and you know, white is on top of black and then black is on top of white here and so forth.

So, you know, this is a little more interesting lattice, although if you, if you kind of stare at it, you notice that it’s actually just a rotation of a square lattice. You look at it like at 45 degrees, this is still just a square lattice, but rotated.

right.

David: what, uh, to create this, we’re looking at basically one, one of the points in the two dimensional, lattice being even numbers and the other points being odd numbers Or is that what like 2z and 2z+1

Peikert: Yeah. Close. The rule here is actually that the, a point is in the lattice if the, uh, sum of the coordinates is even.

Yeah. So, this is one comma zero. The sum is one, that’s odd. So it’s not in the lattice, but this is one comma one, one plus one is two, that’s even. So it’s in the, it’s in the lattice.

So this might be called like the parity lattice as well. That’s another name for it I think. So, yeah, so that’s, that’s a slightly more interesting lattice, but still not super, complicated. But in general, you can have really, you know, different looking lattices that aren’t square at all.

They look more like this. So here, like we have all these, you know, the green points or the lattice points again, the gray grid is just for like kind of background. So there’s the origin. And then we have these kind of green points that are along this line. And then along these kind of parallel lines. But there, you know, the lines are spread out farther apart then the, the different distance between the two points on the, on the lines, right. Adjacent points on the lines. So this is another lattice and it’s not square, right? If you kind of look at it, you can see these parallelograms kind of, but that’s about it, right? There’s no right angles anywhere in here. And, The question you might ask is, well, what’s the rule for producing these green points, right?

And, every lattice has something called a basis, and a basis is just some vectors that are in the lattice that, generate the lattice, by taking, integer combinations of them. So integer sums or differences. So here’s a basis for this green, you know, there’s a lattice of green points. B1 is this vector and B2 is this vector. And if you take, for example, well, this point down here is negative B2, right? And at this point here, pop quiz, uh, tell me what it is.

Deirdre: uh, B1 minus

Peikert: All right.

Deirdre: and.

Peikert: So this is B1, and then you go backwards, minus B2, and you end up at, at this point, right? And so then this one would be like. Uh, oh, shoot. 2B1 - B2? Yes. Okay, good. So B1, here’s 2B1, and then - B2, it gets you to there. Right? And then, so every point on this, uh, every green point here can be expressed as integer times B1 plus integer times B2.

And, uh, I’ve well, I guess I haven’t marked all such points, go out to infinity, right. But you get the idea. So this is a regular structure that goes out forever.

Deirdre: And this sounds like it’s a very efficient type of computation for modern processors and arithmetic to

Peikert: Certainly. I mean, we’re dealing with vectors. They have, typically when we get to the crypto, they have small integer entries to them and their vectors. So maybe they have like, I don’t know, 14 bits or 16 bits each, and it’s a vector of a large dimension, maybe hundreds of dimensions. But when it comes to like, oh, we need to add up a bunch of these or, you know, add, subtract a bunch of these.

Like, that’s something that on modern processors is really fast, and indeed that we get really good speeds out of these things.

David: So how do, how is this different from just the regular vector if, if we you know, just taking a linear algebra course, you’d see the exact same thing. You’d have your vector space and your have your, you know, your points or whatever that are basically linear combinations of the basis set.

And you’d be doing all your stuff and we’d just be using the word matrix instead of lattice.

So

Peikert: Exactly. Yeah. Yeah. A great question. right. I mean, vector space has a basis, right? We even used, use the same words, which can get you in trouble sometimes. But, the, basically the only difference is that the points in the lattice are integer combinations of the basis vectors, in the vector space, they are, like real number combinations, or more generally like field, you know, the coefficients are from a field, maybe finite field or the field of the rationals or the real numbers, whatever.

Right.

Deirdre: Uh, So instead of, instead of like the it’s like, uh, the values are all over a finite field or something like that. That’s why they’re not the vector space. It’s a, it’s— or matrices uh,

Peikert: Yeah, if you want to get down to like, you know, the, the nitty gritty mathematics of it. A vector space, the multiples come from a field of some kind that you name, and a lattice, they come from the integers.

David: Do they have be the, is it integers or is it like a group or something? Some other algebraic structure or?

Peikert: You can use another algebraic— you can use the ring of integers of a number field, if you’re being really fancy. Um, and that’s, that’s definitely something we do, that gives you like algebraically structured lattices. But the key point is that it comes from some discrete, it’s the discreteness of the integers that matters here.

So it gives you points that are kind of discrete in space and So it’s a discrete subset of like a continuous, you know, real space. And, and so that’s really the distinguishing feature, right. That every point kind of is an island unto itself with, uh, nothing, you know, nothing continuous.

Deirdre: how do we use this to do cryptography?

Peikert: Yeah. So, well, um, here’s some cool pictures. The main thing, kind of the core problem that we use for lattices. So let’s just jump forward. Here is Okay, cool. So

Deirdre: Yeah.

Peikert: like— for crypto, you need a hard problem or you need a hard problems, right? You need something that’s hard for the attacker to solve, but easy for like, the decryptor to solve using some secret key, Using some trapdoor or something. So the hard problem, like kind of the most central hard problem on lattices is this uh short, short vector shortest vector problem.

And the problem is it’s pretty easy to state. It just says, I give you a basis of a lattice, like these two vectors B1 and B2. And the goal is to find a vector that’s as short as possible in the lattice. Except for zero. Zero Is always in the lattice. So that doesn’t count. You just need to find a vector that’s, as close to zero as possible.

And so in this lattice here, this point, uh, is a shortest vector in the lattice.

Deirdre: So, is this a, is this a basis that would change for like be a public key or like a, like a generator?

Peikert: Yeah, you can think like in a lot of lattice crypto systems, there’s a, uh, the public key is a basis. And the secret key might be some short vector in the lattice that was generated by the

Deirdre: Got it. Okay.

Peikert: And that would, you have to be very careful about how you do this, but, uh, you know, kind of have to generate a basis together with knowledge of a short vector or maybe multiple short vectors in the lattice, uh, that it generates.

And so, and that short vector would be, you know, your secret key that’s going to let you do some crypto with it.

Deirdre: If there’s like a canonical basis to generate the lattice, uh, wouldn’t there be a canonical answer for the shortest vector, and it sounds like, well, yes, but the basis is your public key. It’s not your public parameter of how to generate a specific lattice.

Peikert: Yeah. so it’s, it’s interesting. There’s a, over the years there’s been a, a big improvement in understanding, you know, what we can do with these and how we could design, these lattice crypto systems. The most sort of, first thing you try, or first thing you’ll hear in a lot of, uh, you know, papers or talks about this stuff is, your individual public key is some basis that you generate from scratch.

And it’s got nothing to do with anyone else’s, it’s just your own. Right. And you generate it and, you know, a short vector in the lattice, or maybe a few short vectors in the lattice and you put that out there and you say, okay, go ahead. And let’s, let’s encrypt right to me. But there, there are cool optimizations that have come about with better understanding where many users can actually kind of share part of the same lattice. they kind of have a common, public parameter, let’s say like a shared, shared a bunch of basis vectors, but then there’s also, you know, one or two additional vectors, which are their own. Right. and the secret key is sort of the short vector that they’ve kind of augmented, uh, onto the lattice.

and so that gives you a nice kind of, improvement in communication because your public key is now just what you augmented this common lattice with, right? Just one or two or a few vectors. And that’s— your public key’s much smaller that way, you don’t have to publish an entire lattice, and your secret key is still just, you know, a few short vectors in the augmented lattice.

Deirdre: Is this where, like Ring LWE and Module-LWE come in? Are those, those sorts of optimizations of like, you don’t have to worry about, the whole thing, you’re just sort of like inside this particular like group or structure in the lattice, we are, we are tweaking a specific module or a specific ring of like vectors in the lattice or something like that.

Peikert: Yeah. That’s a, somewhat of a different idea going on in these like ring or module, like algebraically structured lattices. So there’s, I guess there’s a few things we can say about that. One is that, in these like ring based problems, you can publish an entire lattice of your own. You can generate and publish an entire lattice of your own, and it’s just much smaller in the first place.

You know, if you think, look at this diagram here, right? It’s in two dimensions, you need two vectors, right? And each vector is two dimensions. So altogether, you know, there are four, you know, two times two, four coordinates or four integers that specify, uh, this basis. Right. But in general, we, we have to go to much larger dimensions have any security here.

So we’re hundreds of dimensions.

Deirdre: Oh yeah. Okay.

Peikert: So in hundreds of dimensions, You need to give, let’s say let’s pick 500 dimensions just to be concrete. So you need to get 500 vectors and each vector is 500 coordinates. So that’s a

Deirdre: pairs too. Yeah.

Peikert: Um, yeah, so that’s like 250,000 entries, right? 500 by 500. Uh, so that’s big, right?

Like, and each of those entries is, I don’t know, 16 bits or maybe more or something like that. So that’s really just too big, to do kind of and when it comes to, you know, if you want to be competitive with normal sizes of things. So, so the, the cool thing about, uh, these kinds of ring yeah. Ring based problems is you can still work in 500 or a thousand dimensions, but you only need to give one or two or a small number of vectors to specify it.

with these ring and module, uh, problems, you can specify the entire lattice, like in 500 or a thousand dimensions, you could specify an entire lattice with just one or two, or, you know, some small number of, uh, vectors. So maybe two vectors, each of a thousand dimensions gives you the entire description of this 1000 dimensional lattice.

So you basically say it’s like a factor of a thousand or a factor of 500 in how much size you need to specify, your public key. So that’s what these, these kinds of algebraically structured lattices give you.

Deirdre: got it. Okay. So it sounds like computing over these structures is quite fast, but the keys tend to be large, at least until you start using like a, like a ring-based or a module-based way to like stop sharing, literally all the coordinates of a like 500-dimension, you know, lattice or something like that.

Peikert: Yeah.

Deirdre: what, so what makes this, I’m talking about lattices because they’re, uh, theoretically quantum resistant. Well against an adversary with a large quantum computer that can run algorithms like Grover’s and Shor’s algorithm. Do you, can you give us like a, like a core insight to why lattices and cryptographic protocols based on lattices are fundamentally resilient to such, uh, empowered adversaries?

Peikert: Yeah. I mean, that’s kind of the billion dollar question. I don’t know. We can’t really say why any cryptographic problems are secure against whatever model of adversary you want. Like we,

Deirdre: Although isn’t there like a lower bound proof for certain lattice problems that like hardly any other class of cryptographic, system has?

Peikert: So we can say a few things, we can say a few things about lattices, which may or may not be relevant to the, to the cryptography per se, but here’s a cool fact: like this shortest vector problem here is NP-hard, in the worst case. Right? So this is not a proper like— factoring is almost certainly not NP-hard.

Uh, if it were, there would be massive, you know, unexpected ramifications, right. And same for discrete log. And same for, isogenies I think, right.

So we don’t expect those to be NP-hard. We, we have a proof that, uh, shortest vector problem is NP-hard, in the, you know, in its exact form. So when the problem is find exactly the shortest vector in a given lattice, it’s NP-hard. and that’s a, that’s a worst case problem. And it’s the— asking for the exact shortest vector in a lattice. So that’s not actually a problem that that is used directly in crypto. Uh, I mean, it’s kind of used sometimes as sub-routine and in attacks. So this is can still be kind of giving us some insight.

We could perhaps lower bound the, you know, running time of a particular class of attacks using the fact that it uses a solver for an NP-hard problem. Right. Or that needs a solver for an NP-hard problem. But these aren’t used directly in, in crypto constructions.

Um, what’s used in crypto.

Yeah,

Deirdre: There’s other variants.

Peikert: right. So what’s used in crypto is this kind of approximate, uh, shortest vector problem here, right? So.

Deirdre: Got it.

Peikert: you know, I’ve shown here this blue ball, which is, its radius is a lot larger than the distance between the origin and the shortest vector, right? So this is a relaxed problem that says, find me a vector in this lattice, which is like within a gamma factor, maybe a factor of a hundred or a factor of N within the shortest path, the shortest vector.

Deirdre: And this is where the "with errors", constructions come from. Is that correct? Where you’re like, you have a factor and you may not get an answer that’s within that factor?

Peikert: Yeah, you have, I wouldn’t say it’s a directly related to the, like the "with errors", the class of problems. this notion of like there being an approximately shortest vector that you have to find is you know, permeating all of lattice crypto, whether it’s, you know, LWE or whether it’s SIS.

So the entire, like throughout lattice crypto, in order to break a cryptosystem, you often don’t have to find exactly the shortest vector in the lattice. It’s sufficient to find one which is like pretty close to the shortest, or, you know, something like that. So, you know, the pro the actual problem you’re trying to solve is this relaxed, approximate, uh, you know, approximate, shortest vector or something of that nature. The flip side of that is, is like LWE or "with errors", where there’s some small error relative to a larger quantity that’s, that’s in the lattice.

Deirdre: Okay. So one thing that makes lattices just kind of amazing is that you can do a lot of— you can’t do everything, but you can do a lot of the things that we’ve been doing in classical crypto with like factoring and groups and things like that. And you can do that with lattices somehow, but there’s also a ton of stuff that you can’t do efficiently in classical crypto that you can do much more efficiently with lattices.

And one of those is, is homomorphic encryption or fully homomorphic encryption. Um, why, why are lattices is magical in this regard?

Peikert: I— that’s a great question. I’ve given a lot of thought to this. Uh, you know, I’ve worked on this fully homomorphic encryption, FHE stuff, uh, it’s been, I don’t know, a decade now that have been into it. And, it’s still a mystery, but I think there’s one thing I can say, that’s a bit different from the way that, uh, groups and such work, which, sort of maybe gives a flavor of why full homomomorphism is like, not completely insane of an idea, right? To of course in hindsight, but, um, and it’s this right:

so when you think of Diffie-Hellman, your secret is like some scalar and then you, you know, raise a generator to that scalar, or you, you know, multiply a base point by that scalar, right?

So you have a function that takes you from your secret, which is just a scalar integer, to some group. Okay. And, that mapping is a homomorphism. So when you add two scalars that corresponds to multiplying the corresponding values in the group, right? So, you know, we have additive homomorphism, and linearity, you know, since the dawn of crypto and people understand that.

But, what you don’t get is like multiplicative homomorphism, typically, right. Uh, I guess you can get it with Pallier, but you don’t have additives there. So it’s a sort of pick your, pick your poison. And with lattices the, the trick seems to be that the mapping from your secret to the public value, they’re both in the integers.

Deirdre: Uh, Ah, I see

Peikert: you know, you have a secret, that’s a, some integer vector, and then, you know, you kind of munch it up into, into a lattice, hide it in a lattice. Those are still integer vectors that you get out, and, so obviously the additive homomorphism works, you know, that’s almost trivial to see, like if you add the two vectors, they just get added in the lattice and things just work out nicely.

but you can do multiplication, right? If you kind of multiply the secrets in the kind of appropriate way, you still get integers out. And that actually corresponds to like a not completely crazy operation that you could do on the lattice vectors as well, that some kind of notion of multiplying them.

It’s a tensor product, who cares. But, uh, so, so the fact that you’re, you know, you’re mapping from secret integer to public integers, kind of preserves both the additive and multiplicative structure of the integers, in the secret domain and in the public domain. Uh, it’s kind of the best way I’ve found to think about it.

Uh, but it’s still a mystery, even with that.

Deirdre: I’m now officially jealous at the fact that they’re they’re so, almost consistent that like, it makes sense that’s that the homomomorphism would fall out of the fact that you’re, you’re not moving very far from the secret domain to the public domain and to what you’re operating over. Whereas like in, isogenies for example, it’s like, there’s like a secret scalar, you know, way down in the depths and on the top it’s like curves, but it’s secretly isomorphism classes of curves and secretly it’s J invariants and there’s like layers and layers of structure of like what you’re representing between what’s at the bottom and what’s at the top.

Um, yeah, no.

Peikert: So that makes it hard to see, like what kind of homomorphisms we might— I mean, you have some basic ones,

Deirdre: Yeah, no, it’s, it’s going to be really basic, like commitment stuff and, you know, whatever. And like, it’ll be some sort of like additive homomorphism and like the way it’s additive is literally you’re going to take a random walk in a graph and like, hope you end up at the same place. Like it’s really, really cheap and efficient.

Um, cool. So,

Peikert: walks are not even commutative. I will say though, the one thing, you know, that that gets you, like so, okay, because you’re entirely working with integers, you’re getting an additive and multiplicative homomorphism. The trick that you have to get over is that in all these lattice-based encryption schemes, you have this notion of noise, right?

So it’s like an inexact thing to— in order to encrypt, you have to add some noise and the noise is like completely essential to the security of it. So you have kind of, got your message there. And then you’ve kind of munched it up with some noise and embedded it in the lattice. And that’s the ciphertext, right?

So when you add two ciphertexts that corresponds to adding the two messages, but also adding two noise terms. So that always gets a little bigger. Okay, fine. You make room for it and make sure there’s room for adding two, two noise terms. but then when you multiply two ciphertexts, it corresponds to multiplying the two messages, but also multiplying the two noises and maybe the noise times the message and the other message times the noise.

So the whole game really becomes how do we deal with the growth of the noise and making sure that, uh, there’s enough room. And, uh, so that’s where like a lot of the innovations in FEH have come about and sort of techniques for keeping the noise under control. Uh, it probably heard bootstrapping and there’s other, you know, kind of more technical, uh, things there, but so the game just becomes like, how do we, how do we keep the noise under control as we do these homomorphic operations.

Deirdre: So. Yeah. So it doesn’t just blow up. Cool.

All right. Got to talk about the NIST competition. So we’re almost at the end and in the, the round three finalist for

public. Yeah, yeah, yeah.

David: what the NIST And I think there’s the KEM/DEM and the key exchange one, we could say what those are and…

Deirdre: so, uh, NIST: National Institute of Standards and Technology in the United States is doing a crypto competition.

They’ve done some of these before. This is how we got AES and uh SHA… whatever, several SHAs. and because there’s been so— all the SHAs,

Peikert: Well, not all the SHAs. Okay.

Some shots.

David: two of the SHAs

Deirdre: because there’s been.

So much diversity in the post quantum cryptography kind of literature, they started a competition a couple of years ago to be like, submit, submit your stuff for key encapsulation mechanisms, AKA kind of like public key exchange-ish, but not really Diffie-Hellman, it’s all kind of squishing it into this KEM format, uh, public key encryption and signatures. Submit your stuff. And it’s been years and several rounds, we’ve had round three and I think that’s going to be the last round and we’re going to have finalists within the next year or two, and a lot of the round three finalists for signatures and KEMs and public key encryption are lattice-based.

there’s lattice, there’s classic McEliece, still in there for the key establishment. And there’s some multi-variate based, uh, signatures and some other stuff, but like out of six finalists, four of them are lattice-based. There’s like Saber, Falcon, there’s some— an NTRU and like there’s one more, um, yeah, kinda touched on this, but like, why are why are lattices just kicking everyone’s butt?

Peikert: Well, not, not only are those, the finalists, but there’s alternatives, right? There’s a handful of alternatives in each category and a bunch of those are lattices as well. So, uh, yeah, there’s, there’s a lot, uh, a lot to like. Well, okay.

So why are lattice is doing so well in the process? a lot of possible answers to that. One is there are just so many knobs you can turn in these constructions. So. The lattice based KEMs at least kind of almost all fall into the same basic design template, but there are about 18 knobs that you can turn in, you know, 360 degrees to go from one extreme to the other. And, the. In the first round, there were multiple, multiple more, uh, proposals and submissions that set the knobs in certain ways that maybe didn’t turn out to be so great.

And, uh, and those were removed from, from consideration. but the ones that remain kind of all have, you know, the knobs turned in in certain ways. And some of them turn the knobs, like really toward like a conservative side where they have like, very little, if any algebraic structure to them. Some of them use, like we talked about "with errors" very briefly.

So there’s always this notion of error. Right? You add some errors to get security. The knob is how much error do you add, right. Do you add like, uh, tiny, you know, binary error? Is just zero one errors, or zero plus minus one error, or something like that. It’s about the smallest you could do. Or do you add errors that maybe are, you know, from minus 10 to 10, or maybe minus a thousand to a thousand, right?

There’s a lot of different, uh, ways you can, you can tune that. And, uh, you know, there are various attacks that apply to certain settings of the knobs. Uh, like if you set the error too small, but you also crank up the number of samples, then all of a sudden everything breaks. Okay. Well, none of these schemes give out too many samples, so should we, should we be worried about the size of the error being small?

I don’t know. I mean, the attack itself doesn’t work as, as we know it, but we try, we also want to be kind of far away from, the danger of borderline, right? Yeah, exactly. Away from the edge. So unless you like, you know, living dangerously,

Deirdre: really small sizes of all your stuff.

Peikert: yeah, exactly. So, you know, if you make the errors really small, you can make the keys and the ciphertexts a lot smaller.

So you get some performance benefit, but at the cost of maybe some, like, it’s not as clear about the security, for example, maybe. If you put it very high amount of algebra structure in there, as I was talking about before, you can represent, you know, your lattices with just one or two vectors. So that makes things small and faster to operate on.

But now you have like a lot of algebraic structure, or you go to the complete opposite spectrum— side of the spectrum there and have very little, or no algebraic structure. Now things are a bit bigger, but you have, you know, less algebraic structure that’s perhaps available for attack. so. As far as we know, all of the schemes are, nobody has found any like surprise attacks against the remaining schemes.

Like all the attacks it’s kind of been more or less in the realm of, of what we’ve known, how to attack these things for, you know, for, for quite a while, it’s basically like, apply your best lattice reduction to this thing and, you know, crank at for for long enough, and that’s what you get. But, I think there’s a ton of unexplored questions that maybe haven’t gotten as much attention as they should have, you know, from cryptanalysis, point of view.

Um, yeah. Well, okay. Another knob that there is, is like, do you use random errors or do you use rounding? So this idea of like with rounding, versus with errors, right? This is uh, just like the "with rounding" we introduced 10 years ago, this idea that you can replace the random errors with deterministic rounding.

Deirdre: Interesting.

Peikert: Okay. Very simple idea was not obvious. I mean like two decades of lattice crypto went by before this was actually proposed as an idea,

Deirdre: And why is that secure? Because as like a, as a systems implementer, I’d be like, if I have a choice, I’m going to put randomness in there. Not something that’s possibly deterministic every time.

Peikert: Yeah. Yeah. So that’s the big question. So the idea with rounding is like you’ve got some lattice points, you’re doing some encryption, you end up with some lattice point and then you’re supposed to add some error. And most of the schemes will add some error by just choosing random error from a small interval, maybe, you know, between plus minus 10 or something like that.

Right. And that’s, that’s what they add. And after adding the error, that’s your ciphertext and you send it along. but the alternative idea, this "with rounding", idea is you have the lattice points and then you just round it off to the nearest multiple of 10, let’s say, right? So, you know, if the number is 37, 62, 45, you round it to 40, 60, uh, 50. Right. And these are all multiples of 10. So you don’t, you can divide by 10 right? There’s no, th th this is, so this makes things smaller, right. Instead of having to add error and send the whole thing, you just rounded off and you kind of just drop the low bits that’s, that’s, what’s going on with that.

So, you know, why is this secure? I don’t know! Uh,

Deirdre: so it might

Peikert: in the patient. As far as, as far as we know, I mean, if I had to bet, I would say it’s probably, uh, nearly as secure as adding a comparable amount of error, but I don’t have a great deal of confidence in that. What we showed in this paper from 2011 was that rounding off a very large number of bits, so like dropping 128 bits or 200 bits is at least as secure as adding error that’s a few bits.

Deirdre: Huh!

Peikert: So

that’s not a very useful— I mean, it says if you do a ton of rounding, you’re at least as secure as if you did a tiny bit of random error.

Deirdre: Yeah.

Peikert: But that tells you nothing about how secure you are if you round off a tiny of error, right, do a tiny bit of rounding, which is what a few of the, uh, NIST proposals that are still in the process do. Um, so I don’t know if people have like, looked super carefully at that. I would like to encourage your listenership to like, think about, you know, is there a quantum attack against this deterministic rounding? I mean, is there something you can do that, that makes it faster, speeds it up? I don’t know.

Deirdre: If anyone publishes anything and they don’t cite this podcast episode in their references, I will be upset and I will find it because I look at, I think it goes up on

Peikert: eprint

we’re going to go reviewer #2 on that

Deirdre: Oh, very,

David: can you can put anything Atmos LaTech PIB tax citation. So just shove us in there.

Deirdre: Exactly. Cool, awesome.

Peikert: So I’m trying to get people to like pay attention to this question of rounding. Cause I think it’s, it’s, it’s the sort of, hasn’t gotten enough attention. and you know, maybe there’s some cool cryptanalysis out there.

Deirdre: Um, I’m going to pay attention and see, and then when that, I’m going to be annoying in specific locations and be like, why haven’t we investigated the rounding? The possible vulnerability? We do not know the security parameters of how much rounding that we’re doing!

Peikert: Yeah. so. typically when people, when people analyze it, they say, heuristically, you know, if you round off to the nearest, a multiple of 10, that’s pretty much like adding error, you know, between +/- five

Deirdre: love, love a "heuristic", and not a

Peikert: just model it that way. Yeah. And, but that, that like, kind of diff— like that says by definition, rounding is just as good random error.

Uh, and we’re, we’re just kind of like, that assumes away any possible between the So you, once you do that, you’re, you know, you’re, you’re getting security by assumption.

Is always

Deirdre: to All right. I’m going to keep an eye on that eprint.

All right.

David: One other question about the NIST competition. So they split it up into like KEM/DEM and something else. Um, and you know, traditionally, when I had thought

like KEM, key encapsulation, I think of like using RSA, to encrypt, like the pre-master secret in TLS. And then like, after that, like I kinda also just gave a presentation that said stop doing that.

Just like use Diffie-Hellman, and thought, like we’d kind of moved on of doing public key encryption. We’d been using public keys for signatures and public keys for, for key agreement. So are we, in post quantum world, are we thinking of using public key encryption again? Or am I, am I misunderstanding something about the competition?

Peikert: Yeah. the the KEM, like, so the KEM is an abstraction. It’s just kind of a syntactical contract, right? Of like, these are the algorithms, these are the interfaces, and the thing about a KEM is that instead of like picking the secret key explicitly you run this, uh, encapsulate algorithm is what it’s called, ‘encaps’; when you call encapsulate, it spits out a ciphertext and the underlying secret key. And when you decapsulate, so the, on the other side use, the secret key to de capsulate the ciphertext, and it spits out the same secret key that the encapsulator, uh, produced. So it’s just kind of an, it’s a nice abstraction actually, it says you don’t have to explicitly choose, you know, a secret key or anything. It’s just kind of handed to you as an output of a encapsulation process. And then, you know, you, and you use this as a symmetric key for, you know, your, your AES or your authenticated encryption, um,

Deirdre: Yep. And this is how I think i— we had SIDH and then specifically to fit this, API basically, uh, for the NIST competition, they produced SIKE which does this transform that just does S I D H uh under the hood. And then you do the, en- encryption, the encapsulation, then you check that you did everything correctly, but what’s what was nice that came out of that is there was a known attack against static SIDH. And by doing this transform into SIKE the SIKE form is resilient to a static attack. So we actually have, we actually came up with two birds with one stone almost. Thanks for the NIST competition.

Peikert: Yeah. And you can, you know, you can generically transform a KEM into a public key encryption and vice versa. So like they’re all isomorphic. It’s just like, what’s the API that, that these things are supposed to, uh, adhere to is the point.

Deirdre: Yeah. And I think part of it was so many of these post quantum, uh, protocol, like they didn’t really match, uh, like a public key, Diffie-Hellman-esque, protocol in general, like SIDH was kind of the only one that could in theory match, a key exchange or key establishment sort of protocol. All the rest of them were just, they just did not work for them that way.

So leaning towards an encapsulation mechanism, fit the, the broad array of, of proposals better.

Peikert: Yeah. I think it’s just kind of a more natural thing when you don’t have this like, I don’t want to say symmetric, but like Diffie-Hellman is symmetric, right? The two parties do the same thing and then arrive at, at a secret key. That’s not how any of, any of these things, uh, other things work. So there’s more of a, there’s

Deirdre: yeah

Peikert: roles, right? There’s like an initiator and a responder type of role to these things.

Deirdre: Cool. Awesome.

Why does it matter that Michigan beat Ohio? I know nothing about this.

David: well,

Deirdre: Everyone very excited, and I was— I had no idea why.

David: I’m going to say, hold that question, because I want to tell a story first, in Pier, Michigan, a Michigan fan. I want to tell a story first. Involves Chris cause it was in 2018. I was, uh, I was about to defend my PhD. This was about a week before my, less than a week before the final copy of my dissertation was due, and Michigan had just played Rutgers. and I’m leaving the stadium. It was a night game. I’m walking out via this path that happens to take you past where a lot of professors live underneath the bleachers for the field hockey stadium. And I hear someone say, "Hey, remember in 2016, when we beat Rutgers 76 to zero?", and then again, well, here you go.

I hear someone, someone in very Michigan fan class and fashion goes, "Actually, it was 78 to zero." And that person was Professor Chris Piekert, who I was like, "wait a minute, I didn’t know you were here too!" And he was like, "don’t you have a dissertation to be writing?" And I was like, "no, that’s a, that’s not a Saturday problem!"

Um, so there is no takeaway from the story other than, um, the cryptographers are also at the football games in Michigan.

Peikert: I remember exactly. As soon as you started, as soon as you said 2018, I know exactly story would be. Of course. it was, uh, it was a good, that was good. One. I think I was there with my, I think it was there with my son probably walking back and he may have been the one who mentioned 76-0

David: don’t know

Peikert: anyway, he had to go up for adoption

for that was

David: that will say that’s 78 to zero game has my favorite stat line game which is

Kalid Hill, three carries, three yards, three touchdowns.

Peikert: Three touchdowns. Perfect. Yeah, you can’t. Uh, that’s pretty, pretty efficient day for Mr. Hill,that that day.

David: So, I’ll hand it back to you to answer Deirdre’s original question, which is, why was everybody freaking out on saturday? for context, we’re recording this on Thursday, December 2nd. And we’re talking about

Deirdre: Yeah.

David: the previous Saturday,

but

Peikert: Previous Saturday.

It’s still very fresh in our minds and still floating on a cloud about this. So, you know, I have a few stories, uh, that that may completely bore or mystify, uh, the listenership here, but, you know, Michigan and Ohio state, colloquially known as Ohio, to us or that team down south, um, have a long history of rivalry.

And, uh, both teams are, you know, power football, Midwest, uh, you know, teams and, um, Ohio state has provided many coaches to Michigan over the years, including some of the most well-known and kind of storied coaches. And a lot of players come from Ohio. There’s some players from Michigan go to Ohio state and vice versa.

So there’s a lot of bad blood. There was a, there was a war, uh, over Toledo between Michigan

Yeah. A war. I mean,

how did it go?

David: I description of

No. There

Peikert: think Ohio lost and Ohio lost and had to take Toledo is what understand was

David: it depends on who you consider winning. They, they claimed they stole Toledo from us, even though we had it on the map. But in exchange, we got the upper peninsula, which turned out to have a lot useful stuff back then in that, namely iron.

Peikert: Exactly. So, so there’s a lot of, you know, a lot of bad blood between Michigan and Ohio and, uh, the rivalry has had its ups and downs between the two teams. So, you know, in the 19, uh, 1969 was this, this year where Ohio state had like supposedly the best team at best football team in history, right?

The very best. And, uh, Michigan had this new coach named Bo Schembechler and, uh, Michigan was playing Ohio state, you know, they’re the best team ever in their history and Michigan, they always play in the last week of the season, you know, the final, final, you know, conflagration and, uh, Michigan beats them 24 to 12 and this new coach’s first year. Uh, and, and so, you know, that was just a, you know, earthquake to to the college football world at the time.

So anyway, um, in the 1990s, which is when I was in a kind of growing up for going to, uh, seeing a lot of games, these were the, um, the best days of the Michigan Ohio state rivalry. Uh, there was this excellent, excellent coach at Ohio state named John Cooper who always had really good teams.

You know, they’d come in with maybe one loss or no losses at the end of the year and then play in Michigan, you know, they’d be up. And they would just lay an egg, Uh, Cooper, Cooper was two 10, and one, two wins, 10 losses and one tie in his 13 years at Ohio state. And he was basically fired for not beating Michigan.

I mean, he had a great record almost every year and they said, look, you can’t beat Michigan. Like get out. So in the nineties, uh, there were, you know, these great games that I was able to go to in the late eighties and everything through the Cooper years with, um, you know, various Michigan coaches. So it was a very reliable win for us, you know, we’d, we’d go up against a highly ranked Ohio state team and then just take their lunch money at of the year.

And it was awesome. So, yeah. So, um, around about the turn of the century, the turn of the millennium, uh, the tide turned as well and Ohio state, got in this mode where, you know, kind of all they cared about was to win football games and they got some really good and also really skeezy coaches, and they got a lot of good players and a lot of good coaches and it’s, it’s been a drought.

Uh, the last win against Ohio state for Michigan was, well, it was 2003 they won, I think. And got a win in 2011, but it was this, it was this weird year where it, uh, Ohio state was bad. They had some interim coach, then it doesn’t even really count

Deirdre: feel didn’t feel right

Peikert: yeah.

So basically one win in 18 years in this storied rivalry.

And, uh, so yeah. So last Saturday, Ohio state is this juggernaut, the ranked number two, they had just beaten Michigan state 49 to zero in the first half. Uh, and then one, one touchdown. Now who cares what happened the second half. They basically beat him by 40, 49 points, like almost instantly. And, um, you know, everybody was like, here we go again, right.

Another, you know, drubbing at the hands of Ohio state coming up and then they just destroyed them! I mean, they just flat out controlled the entire game. Could just, it was just a old-fashioned beat down.

Deirdre: Nice.

David: the way that if you were going to make a Homer like, feel good movie targeted at fans, it’d be like, what would we have happen Well we would have it—, there’s no way they can beat them. And then we would have them beat them in the most old fashioned, Michigan way possible, where a bunch of big dudes just hold Ohio state down and we run it up the middle and truck directly into people and they just can’t tackle us. Like nothing fancy, none of this spread offense stuff. Nothing, you know, you’re not

Deirdre: meat

and

David: like Joe burrow, just

push them out of the way

Peikert: Yeah. Until, until, uh, you know, push them forward for seven yards, 10 yards, 14 yards, and just keep pushing them until they don’t want to be there anymore. And then keep pushing ‘em even farther after that. So it was a real win with cruelty type of game, uh. But, you know, by the second half, like Ohio state clearly did not want to be there, were like, like, oh jeese, we don’t any part of this, but they had to finish and finish they did. So.

That was, that was, uh, that was a really a cleansing moment. And, uh, yeah, it gets Michigan into the first big, uh, conference championship game ever, ‘cause it 10 years ago

Deirdre: Oh,

okay.

Peikert: kind new thing, but like we’ve never

David: we haven’t been in the game because the game itself is new.

Peikert: yeah. And we haven’t won the conference in almost 20 years, I think. So. So it’s, it’s been a long time in the wilderness here. So we’re a cautiously optimistic about, about that. That’s the game in two days. So whenever this gets posted, people may or may not know the result.

Deirdre: let’s see. I’ll try and get it up as soon as possible.

Peikert: Great.

Deirdre: Cool. Thank you. Uh, I’m not a college football person. I’m barely a football person. So I saw everyone freaking out and I was like, oh, there’s a football game. Everyone is crying. Cause they beat, Michigan beat Ohio. Okay!

David: And for, you know, this was the most popular college football game on Fox, ever.

Peikert: In history.

Deirdre: I believe it in

Peikert: think 16 million people watched it. It was the number that came out

David: For what Michigan games just draw people out, they’re big moneymakers. Um, like they were just in a random bowl game a couple of years ago against Alabama. And that set the record for most watch college football games since the 2012 national championship.

Deirdre: Oh yeah.

Peikert: That’s really weird actually.

Deirdre: Yeah. I could see that, like I know shit about football and I know about

Peikert: Yeah. Yeah. So this is a weird year for college football altogether. I mean, the kind of the teams that have been at the top, you know, the top four or five teams are, they’re sort of still there, but they’re, they’re kind of, they’re mortal this year.

and so there’s a bunch of weird, weird teams up at the top now, and it’s going to be more interesting that way, I’m kind of glad to see it. Yeah.

Deirdre: This is a dumb question, but is Michigan D-1?

Yes

Peikert: They can, they can give scholarships.

David: D-1A or whatever the top half half of D1 is

Deirdre: right. Good. All right. at MIT, we had a D3 football team and like they finally made it into the playoffs one year. And that was big news because MIT football did not usually do well, even in D3

Peikert: Yeah. When I was there, I think, I think we had one good season when I and it was kind of like, oh, interesting.

Deirdre: yeah.

Peikert: MIT has a football team!

Deirdre: Yes. It’s literally, oh, MIT has a football team. We didn’t even realize.

Peikert: and they won game or maybe two Yeah. was

Deirdre: Uh, MIT is very good at like, uh, sports involving focus, like pistol. They’re very good at sports like that. They beat like the air force and the coast guard and the other military forces in like focusing real hard and shooting stuff. Not the football. Yeah.

Peikert: So my, my crossover, uh, I didn’t mention this before, but it’s kind of interesting the history of, uh, of going back to lattices real quickly. So lattices had an interesting history because the first things that you might consider lattice-based crypto, um, kind of came out in like the late seventies and early 80s. then they all got broken, uh, because people didn’t really know, like it just kind of had these ad hoc ideas, like, oh, we’ll embed this kind of structure in there or that, and use it for decryption so forth. And, the kind of structure that was added in turned out to be like completely, devastating, right.

Like opened it to total attacks. So this nice paper by Andy Odlyzko from the 1990s called, uh, from 1990, rather called, uh, the rise and fall of knapsack cryptography,

So, so now it’s like, the rise and fall and rise again, of, of uh, lattice crypto. It

Deirdre: lattices.

Peikert: know, it took two or three decades.

Deirdre: Did you do

Peikert: anyway, I

Deirdre: with that or with someone else? Did a talk with that title?

Peikert: maybe it wasn’t me, but it, it sounds familiar. So anyway, the

point is

Deirdre: it was about isogenies. Sorry. I’m sorry, go ahead.

Peikert: Uh, okay. Yeah,

David: that was the most on-brand mistake that, uh, to the point where I’m like, not even sure that wasn’t an intentional mistake. That was an honest mistake.

Peikert: Yeah. So anyway, like lattices had, you know, some false starts at the beginning too, you know, not as many false starts as Ohio state had last weekend, they didn’t have like in Hutchinson staring down there in their face or anything. So, yeah, so any, you know, any field can, can go from being, you know, dead to, uh, to rising from the ashes again.

Deirdre: So I guess the, the, uh, the class group actions, can’t count them out.

Peikert: can’t count them out.

Deirdre: Hmm. Awesome.

Peikert: need the right idea.

Deirdre: I’m, I’m always hopeful that, you know, we have a ton of systems that are based on all these classical assumptions, and we have to either come up with completely brand new things which fit the same needs, which is very hard, um, or we find ways to do them in a quantum secure setting.

And so I’m crossing my fingers that that sort of class group stuff could be done in an efficient, secure way. But, uh, I don’t think we’re there yet. Who knows? Maybe we’ll figure out a way to do some, something crazy with lattices because we can do all sorts of stuff with lattices, such as small, efficient zero knowledge proofs?

And I don’t

Peikert: be

Deirdre: if

that’s I want that, but, uh, we’ll see.

Peikert: small is not the first word you’re gonna get out of lattices any, uh, anything. I mean, they’re not big, but they’re certainly not small like we’re used to with elliptic curves and isogenies, unfortunately. Although, you know, maybe we’re just one, one or two ideas away from, from that.

Deirdre: I hope so.

David: If people want to get into lattice cryptography, are there good places to get started? or, or things to read?

Peikert: Yeah. There’s this great, uh, this is great survey by Daniele Micciancio and Oded Regev from 2008, uh, which is really accessible. It’s not too long. Uh, it gives you, you know, the kind of bread and butter basics. Um, it’s from 2008 and you know, some things have happened then the field. So, it’s not anywhere near up to date.

Nicely, all the key ideas that are in like modern systems, modern lattice systems are sort of the same ideas that were present in, in that survey. So we’ve just, you know, in that 13 years we’ve found like tons of other cool things you can do with the same core ideas. So that’s a really nice one to start with.

I can always plug my own survey if you’re like a grad student wanting to kind of get into the real nitty-gritty and the guts of things. I can drop a link to that, it’s on my webpage. Yeah.

There’s oh,

Deirdre: is that a decade of lattice cryptography?

Peikert: it’s called. Yes. Yes. It’s also out of date. I think it was published in 2015 or ‘16 or Um, it’s still, a good deal has happened since then. yeah, actually there’s a lot of good YouTube, uh, videos of the, like the Bar-Ilan had a winter school, Bar-Ilan University a winter school in 2012, I want to say, or maybe 2010 somewhere around there. Um, so a lot of good, like introductory talks, uh, laying out the, the main ideas and, um, with a lot of references there.

And, uh, I think there’s a lot of just, YouTube videos of conference talks or, or, you know, tutorials and things like that, that are, that are available. Uh, so I find that. I dunno, the, the millennials, like, uh, like the YouTubes for, for learning things, which is, which is cool.

Deirdre: millennials are old now.

Peikert: Whatever happened to— okay. Yeah. The zoomers’, okay. The zoomers like their YouTube’s.

Deirdre: The zoomers. Yeah, I, I liked the YouTubes. I like, I like diagrams and pictures and stuff.

David: This is a very visual podcast.

Peikert: Now talk Yeah.

Deirdre: Yeah.

Peikert: Talks or talks are great. Especially when you’re wanting to learn like the basic ideas, you know, you don’t want to get bogged down in, uh, all the details of a paper necessarily, but just get the, the key bearings.

Deirdre: Cool. Awesome. Anything else David,

David: I don’t know. I just like to say my name is Brian Cook and thank you for listening to MgoBlog Podcast.

Deirdre: Awesome. Thank you, Chris. Thank you very much for coming, uh, sorry for the internet problems. Uh, go Michigan. Is that right? Go blue. I’m sorry, go

Peikert: go go Yeah, we need it. We got it. We got another game in two days and hopefully, hopefully a couple more after that. That would be

Deirdre: Cool. All

Thank

Peikert: for the invitation. is a lot of fun.

Deirdre: Absolutely.

Peikert: David David, you invited me at like my peak level of, you know, happiness and amenability to agreeing to anything.

David: Yeah.

Peikert: So

David: That’s why,

why I did

Peikert: If you want, if you want me on a program committee, I don’t know why, but just Michigan’s next victory, send me program committee invitations and I’ll say, yeah, let’s do it.

Deirdre: Awesome. We know, we know the way to Peikert’s heart. Awesome. I’m hitting, I’m hitting pause